Big Data & Analytics (Version 2) – IoT Fundamentals: Big Data and Analytics End of Course Assessment Final Exam Answers Full Questions

1. A patient who lives in Northern Canada has an MRI taken. The results of the medical procedure are immediately transmitted to a specialist in Toronto who will review the findings. Which three characteristics would best describe the patient data being transmitted? (Choose three.)

- private

- unstructured

- random

- structured

- in motion

- at rest

Explanation: Electronic medical information is private personal information. The digital results of tests such as x-rays, MRIs, and ultrasounds do not have a format of fixed fields so they are considered unstructured. Because the information is transmitted from one place to another for review, it would be in motion. Data is at rest once it is stored in a data center.

2. Which three key words are used to describe the difference between Big Data and data? (Choose three.)

- volume

- vigor

- variety

- value

- velocity

- vibrancy

Explanation: Three key words can help distinguish data from Big Data:

Volume – describes the amount of Big Data being transported and stored

Velocity – describes the rapid rate at which Big Data is moving

Variety – describes the type of Big Data, which is rarely in a state that is perfectly ready for processing and analysis

3. What are three types of structured data? (Choose three.)

- e-commerce user accounts

- spreadsheet data

- blogs

- white papers

- newspaper articles

- data in relational databases

Explanation: Structured data is entered and maintained in fixed fields within a file or record, such as data found in relational databases and spreadsheets. Structured data entry requires a certain format to minimize errors and make it easier for computer interpretation.

4. What are two plain-text file types that are compatible with numerous applications and use a standard method of representing data records? (Choose two.)

Explanation: As data is collected from varying sources and in varying formats, it is beneficial to utilize specific file types that allow easy conversion and universal application support. CSV, JSON, and XML are plain text file types that allow for collecting and analyzing of data in a format that is easily compatible and applicable for analysis.
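As a rough illustration of why these plain-text formats move easily between applications, the sketch below writes one record as CSV, JSON, and XML and reads it back using only the Python standard library. The record, its field names, and its values are invented for this example.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical sensor record (field names and values are illustrative only).
record = {"sensor_id": "t-01", "temperature_c": "21.5"}

# CSV: a header row followed by one comma-separated row per record.
buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=list(record))
writer.writeheader()
writer.writerow(record)
csv_text = buf.getvalue()
from_csv = dict(next(csv.DictReader(io.StringIO(csv_text))))

# JSON: name/value pairs inside braces.
json_text = json.dumps(record)
from_json = json.loads(json_text)

# XML: each field becomes a child element of a <record> element.
root = ET.Element("record")
for name, value in record.items():
    ET.SubElement(root, name).text = value
xml_text = ET.tostring(root, encoding="unicode")
from_xml = {child.tag: child.text for child in ET.fromstring(xml_text)}

print(csv_text, json_text, xml_text, sep="\n")
```

All three round trips recover the same field names and values, which is what makes these formats convenient for exchanging data between tools.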

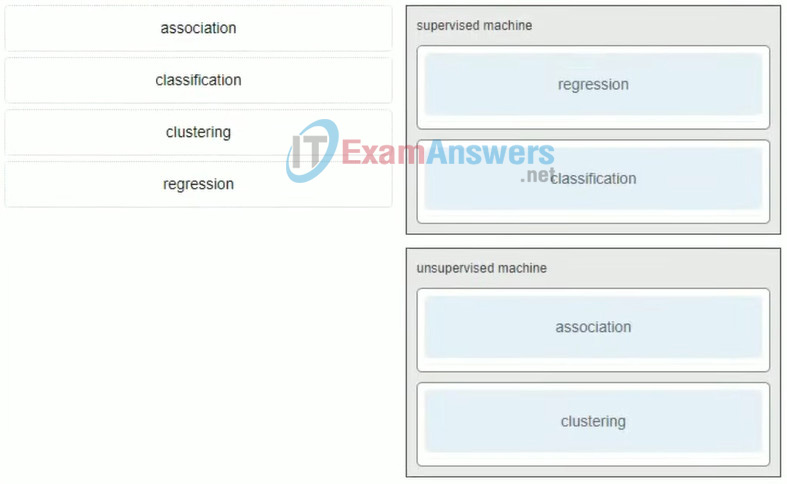

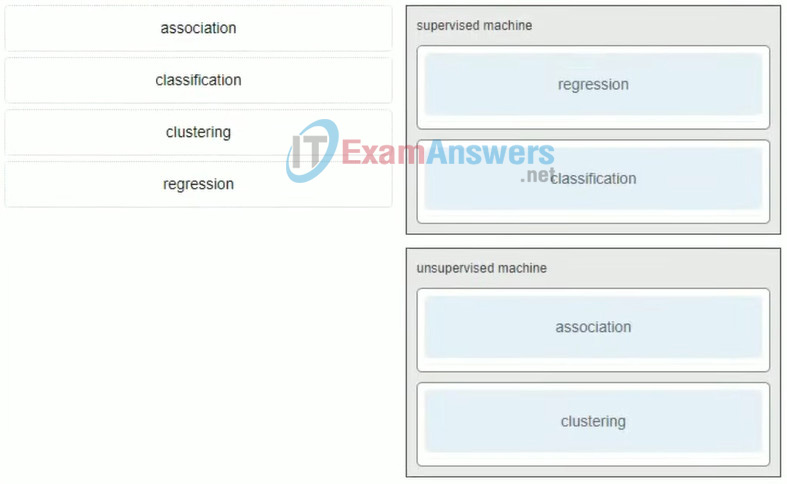

5. Match the algorithm to the type of learning algorithm.

6. Which two tasks are part of the transforming data process? (Choose two.)

- creating visual representations of the data

- collecting data required to perform the analysis

- joining data from multiple sources

- using rules to modify the source data to the type of data needed for a target database

- presenting the knowledge gained from the data

Explanation: Transforming data is the process of modifying data into a usable form. This includes tasks such as aggregating data and sorting it. Collecting data is the process of extracting data. Creating visual representations of data and presenting the knowledge gained from the data are examples of the final steps that are used in data analysis.
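The two transformation tasks named in the answer, joining sources and applying rules to reach a target data type, can be sketched in a few lines of Python. The readings, the location lookup, and the Fahrenheit-to-Celsius rule are hypothetical and chosen only to make the steps visible.

```python
# Hypothetical source data: readings from one source, locations from another.
readings = [
    {"device": "a1", "temp_f": "68.0"},
    {"device": "b2", "temp_f": "77.0"},
]
locations = {"a1": "Toronto", "b2": "Ottawa"}


def transform(rows, site_by_device):
    """Join the two sources and convert each reading to the target schema."""
    out = []
    for row in rows:
        # Rule: the source stores text in Fahrenheit; the target wants numeric Celsius.
        temp_c = (float(row["temp_f"]) - 32) * 5 / 9
        out.append({
            "device": row["device"],
            # Join step: look up the site for this device in the second source.
            "site": site_by_device.get(row["device"], "unknown"),
            "temp_c": round(temp_c, 1),
        })
    return out


transformed = transform(readings, locations)
print(transformed)
```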

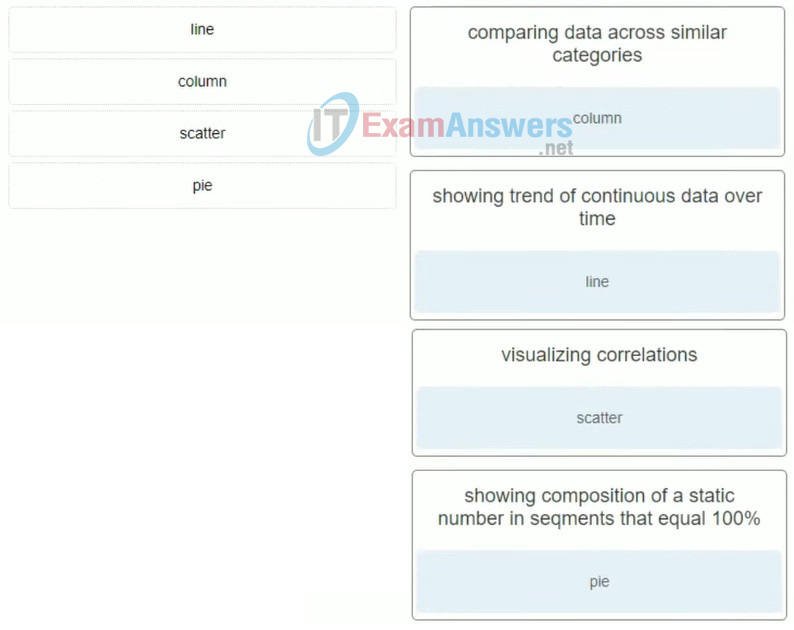

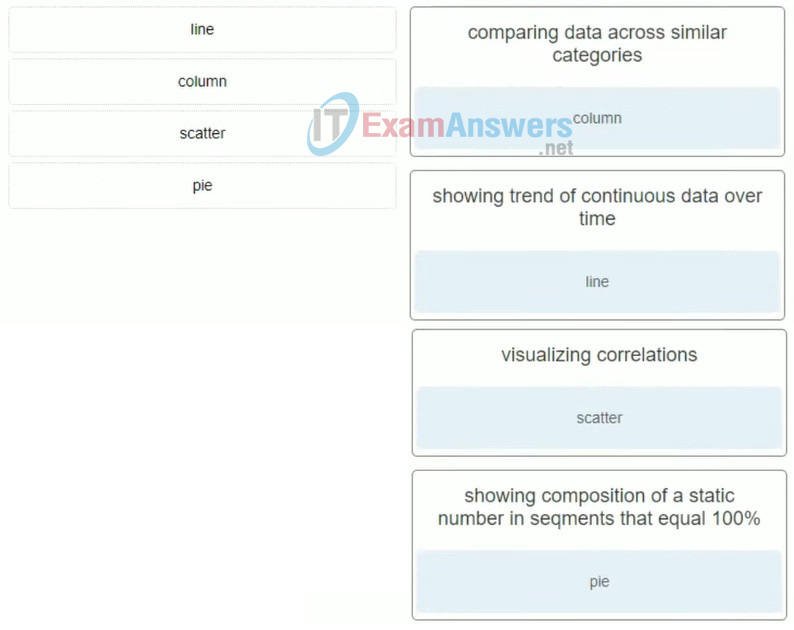

7. Match the type of chart with the best use.

8. What two benefits are gained when an organization adopts cloud computing and virtualization? (Choose two.)

- elimination of vulnerabilities to cyber attacks

- provides a “pay-as-you-go” model, allowing organizations to treat computing and storage expenses as a utility

- enables rapid responses to increasing data volume requirements

- distributed processing of large data sets in the size of terabytes

- increases the dependence on onsite IT resources

Explanation: Organizations can use virtualization to consolidate the number of required servers by running many virtual servers on a single physical server. Cloud computing allows organizations to scale their solutions as required and to pay only for the resources they require.

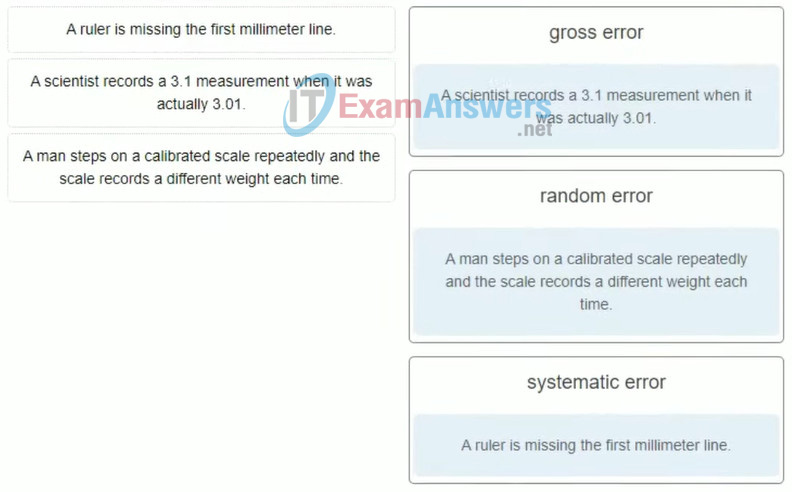

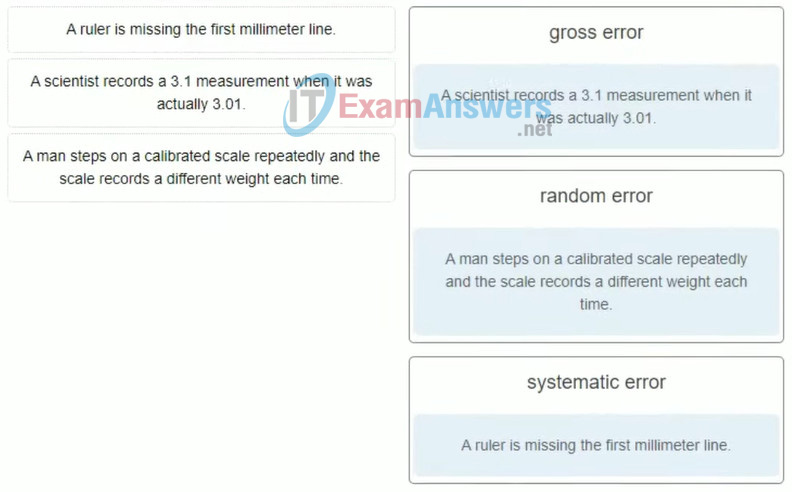

9. Match the type of error to the corresponding source of the error.

10. What are two features supported by NoSQL databases? (Choose two.)

- establishing relationships within stored data

- relying on the relational database approach of linked tables

- importing unstructured data

- organizing data in columns, tables, and rows

- using the key-value storing approach

Explanation: NoSQL databases can use a key-value pair approach to store data, and a NoSQL database can import unstructured data. Organizing data in columns, tables, and rows and establishing relationships within the stored data are characteristics of relational databases. NoSQL does not rely on the relational database approach of linked tables.
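The key-value approach can be pictured with a toy in-memory store. This is only a sketch: the `KeyValueStore` class and its `put` and `get` methods are hypothetical names and do not correspond to any real NoSQL product's API.

```python
import json


class KeyValueStore:
    """Toy in-memory key-value store; not a real NoSQL client."""

    def __init__(self):
        self._data = {}

    def put(self, key, value):
        # Values are stored as opaque JSON text; no schema or fixed fields are enforced.
        self._data[key] = json.dumps(value)

    def get(self, key, default=None):
        raw = self._data.get(key)
        return default if raw is None else json.loads(raw)


store = KeyValueStore()
store.put("user:1", {"name": "Ada", "tags": ["admin"]})       # nested document
store.put("note:7", "free-form text with no fixed fields")    # unstructured text
print(store.get("user:1"), store.get("note:7"))
```

Each value is retrieved by its key alone, and two values under different keys need not share any structure.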

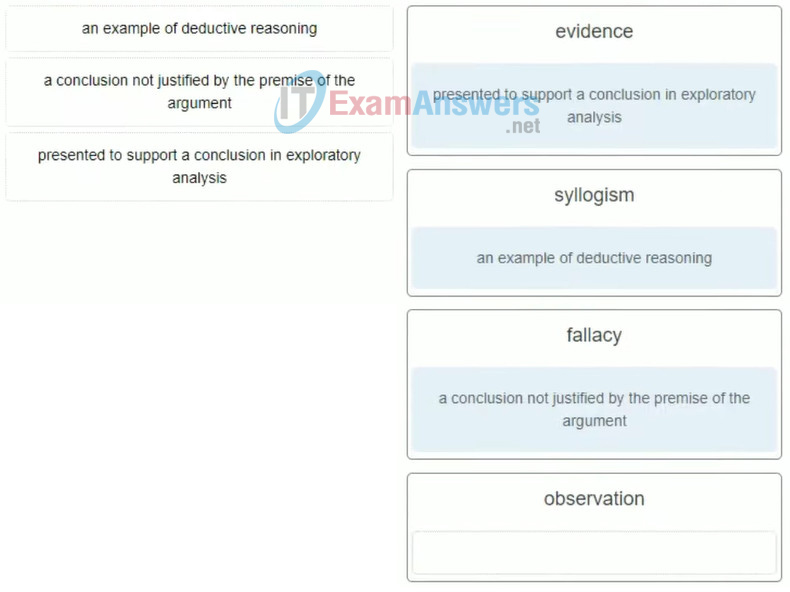

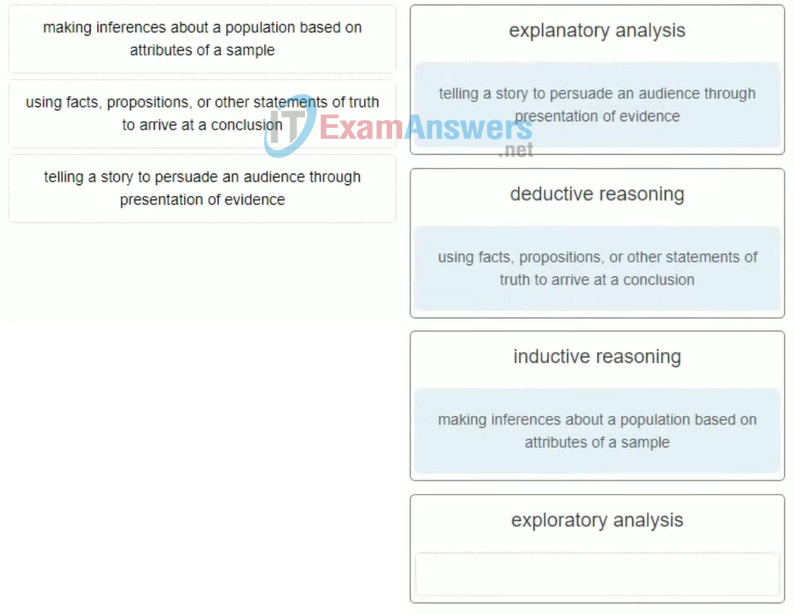

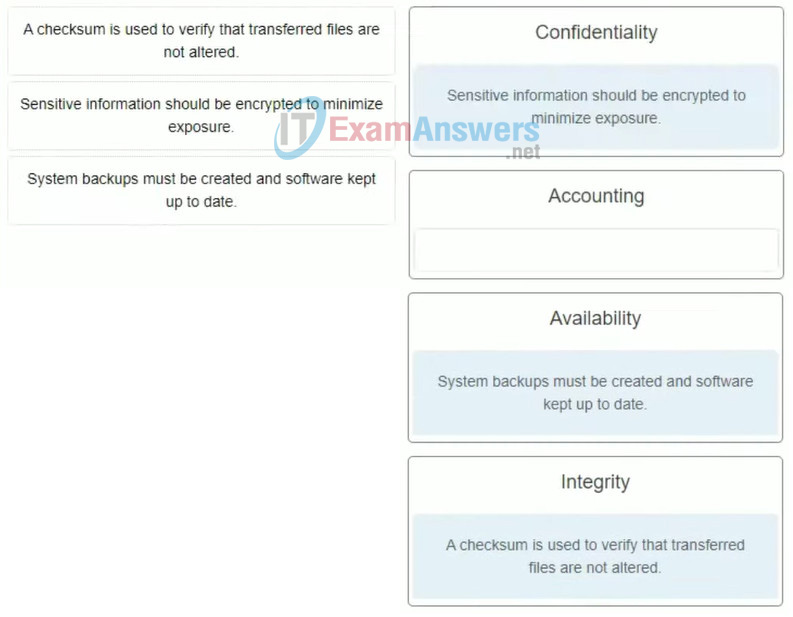

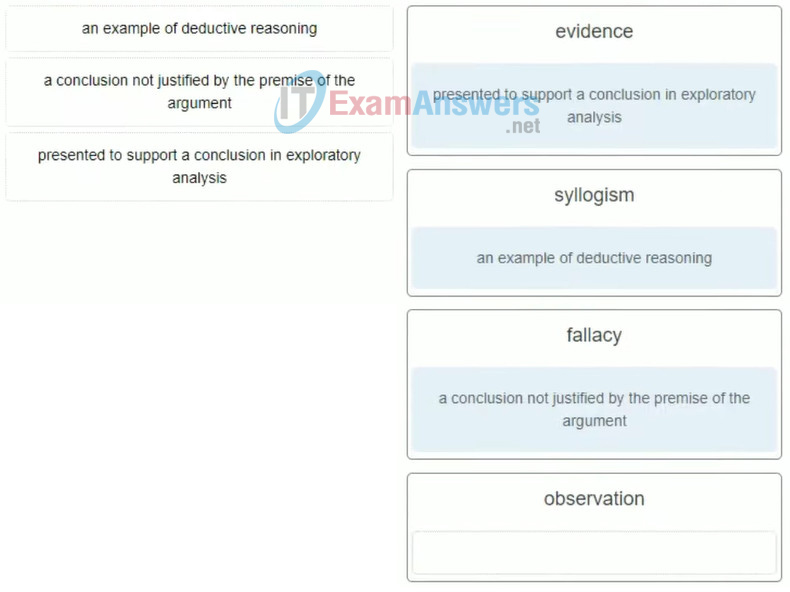

11. Match the terms to the definition. (Not all options are used.)

12. What are two advantages of using CFS over HDFS? (Choose two.)

- low-cost storage solution

- specialized hardware

- ability to run a single database across multiple data centers

- automatic failover of nodes, clusters, and data centers

- master-slave architecture

Explanation: Some of the benefits of using CFS over HDFS are as follows:

Better availability – CFS does not require shared storage solutions.

Basic hardware support – No special servers are needed and no special network devices are needed for CFS.

Data integration – All data that is written to CFS is replicated to both analytics and search nodes.

Automatic failover – As with availability, failover is automatic because of replication.

Easier deployment – Clusters are easy to set up and can be running in a matter of minutes. CFS does not require complicated storage requirements or master-slave configurations.

Supports multiple data centers – CFS can run a single database across multiple data centers.

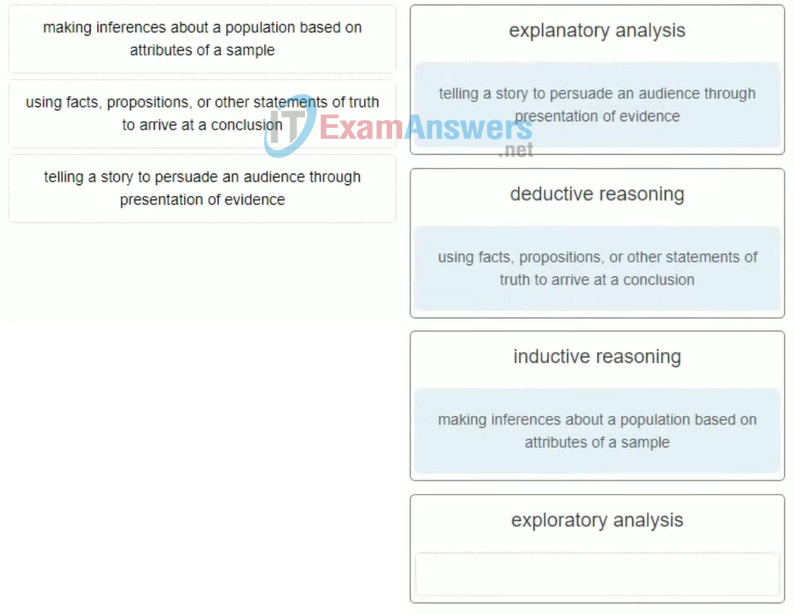

13. Match each term to the correct definition. (Not all options are used.)

14. With the number of sensors and other end devices growing exponentially, which type of device is increasingly used to better manage Internet traffic for systems that are in motion?

- proxy servers

- cellular towers

- mobile routers

- Wi-Fi access points

Explanation: The rapid increase of devices in the IoT is one of the primary reasons for the exponential growth in data generation. With the number of sensors and other end devices growing exponentially, mobile routers are increasingly used to better manage Internet traffic for systems that are in motion.

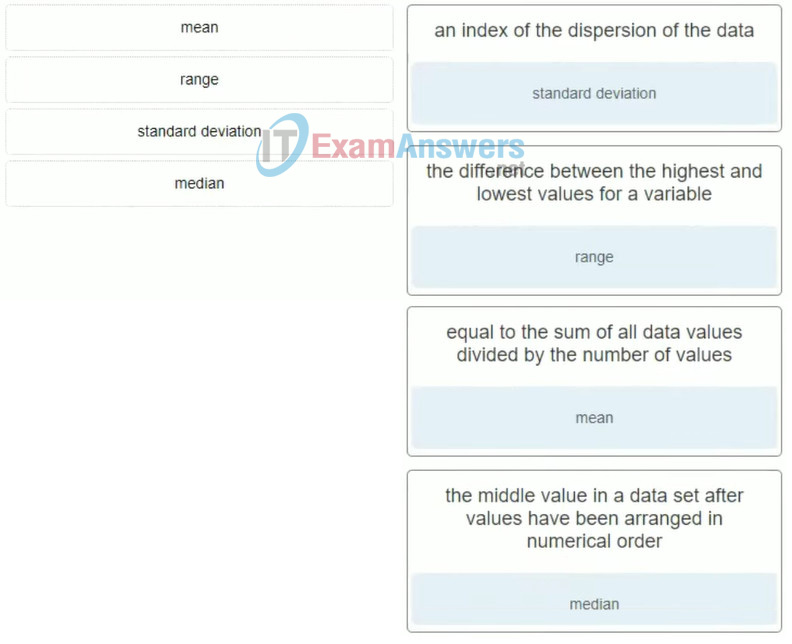

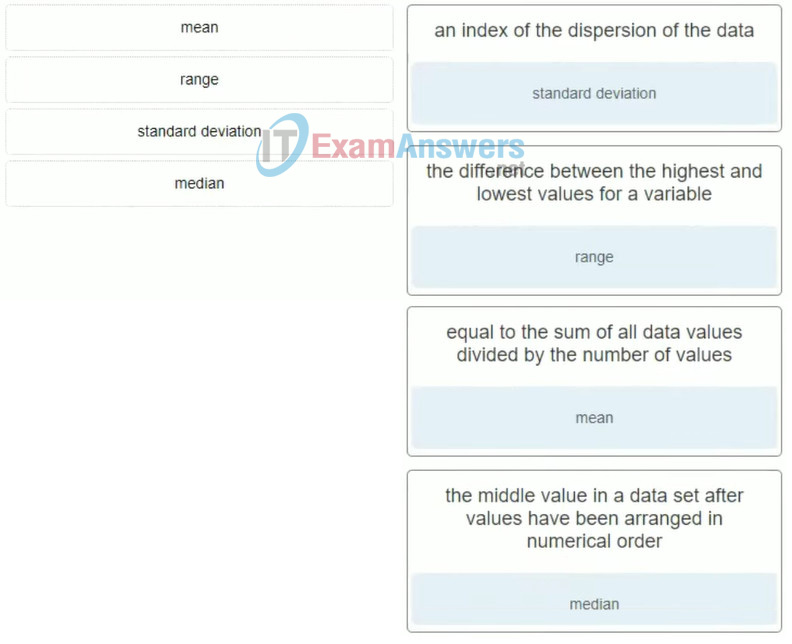

15. Match the statistical term with the description.

16. Which type of information supports managerial analysis in determining whether the company should expand its manufacturing facility?

- transactional

- analytical

- comparative

- capital

Explanation: The two primary types of business information useful to a company are transactional information and analytical information. Transactional information is captured and stored as events happen. Transactional information can be used to analyze daily sales reports and production schedules to determine how much inventory to carry. Analytical information supports managerial analysis tasks like determining whether the organization should build a new manufacturing plant or hire additional sales personnel.

17. What networking technology is used when a company with multiple locations requires data and analysis available close to their network edge?

- fog computing

- Hadoop

- NoSQL

- virtualization

Explanation: Fog computing provides data, compute, storage, and application services to end-users. Fog characteristics include proximity to end-users, dense geographical distribution, and support for mobility. Services are hosted at the network edge or even on end devices such as set-top-boxes or access points.

18. How is the Big Data infrastructure different from the traditional data infrastructure?

- Big Data platforms distribute data on several computing and storage nodes.

- Security is integrated in all components associated with Big Data.

- Big Data involves fewer people within the organization that can access the data.

- The Big Data infrastructure requires proprietary products and protocols to implement.

Explanation: In the Big Data infrastructure, applications, logs, event data, sensor data, mobility data, social media, and stream data could all provide data into the Big Data infrastructure that could involve data centers, NoSQL, traditional database servers, storage, and Hadoop-based technology.

19. What is an example of a relational database?

- Excel spreadsheet

- Hadoop

- Visual Network Index

- SQL server

Explanation: Two popular relational database management systems are Oracle Database and Microsoft SQL Server.

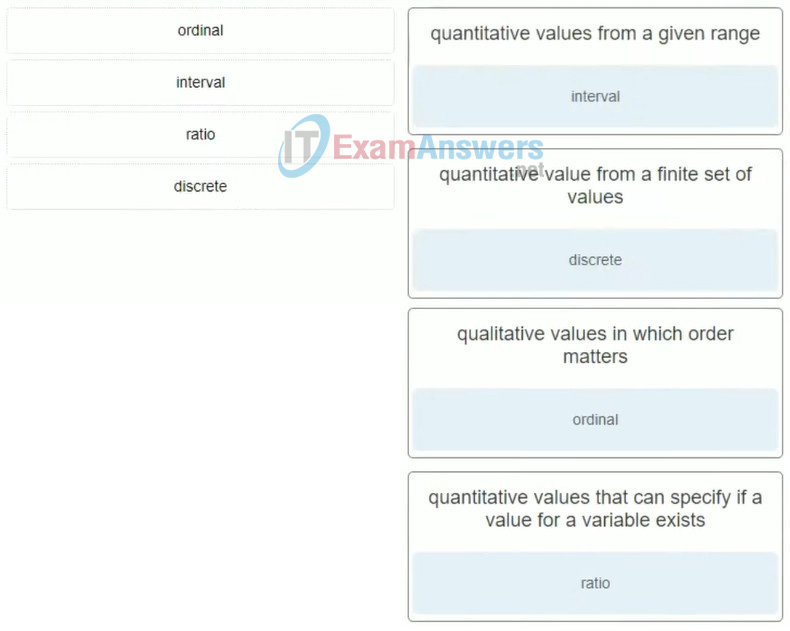

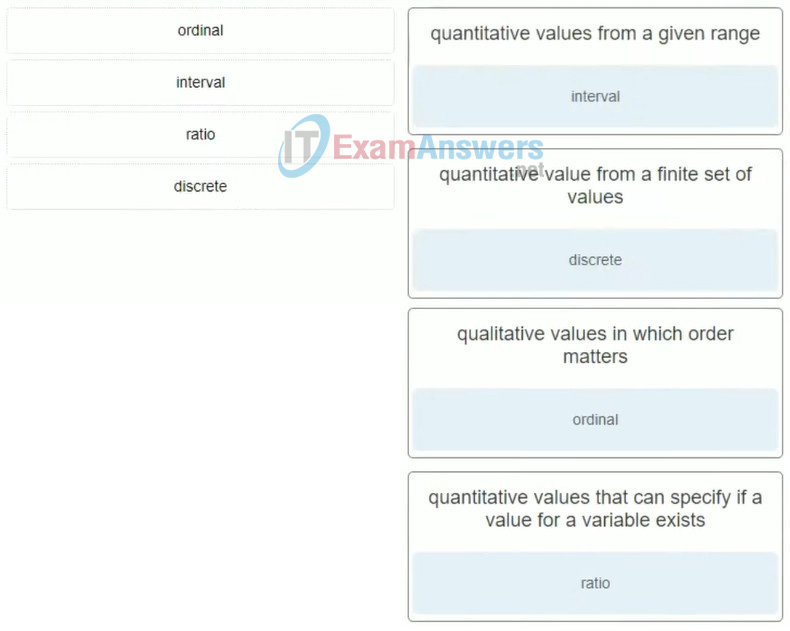

20. Match the variable with the description.

21. What is a purpose of descriptive statistics?

- to compare groups of data sets

- to make predictions about other values

- to summarize findings within a data set

- to make generalizations about a population

Explanation: There are two types of statistics used in the analysis of data: descriptive and inferential. Descriptive statistics are used to describe or summarize values in a data set. Inferential statistics are used to make predictions about data.
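A short sketch of descriptive statistics follows, using Python's standard `statistics` module. The list of values is made up; the point is that every number in the summary describes this data set only and makes no claim about a wider population.

```python
import statistics

# Hypothetical measurements from a single data set.
values = [4, 8, 15, 16, 23, 42]

summary = {
    "count": len(values),
    "mean": statistics.mean(values),
    "median": statistics.median(values),
    "stdev": statistics.stdev(values),  # sample standard deviation
    "min": min(values),
    "max": max(values),
}
print(summary)
```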

22. Five hundred people are working in an office. For a study, which term describes a group of 50 people that have been chosen to represent the entire office?

- category

- sample

- cluster

- group

Explanation: A population shares a common set of characteristics. Because it is typically not feasible to study an entire population, a representative subset of the population, called a sample, is chosen for analysis.
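Drawing such a sample can be sketched with the standard `random` module. The employee labels are placeholders, and the fixed seed is used only so the example is repeatable; simple random sampling is one of several ways to choose a representative group.

```python
import random

rng = random.Random(42)  # fixed seed only so the sketch is repeatable

# Hypothetical population: the 500 people working in the office.
population = [f"employee-{i}" for i in range(1, 501)]

# Simple random sample of 50 distinct people, drawn without replacement.
sample = rng.sample(population, k=50)
print(len(sample), sample[:3])
```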

23. Which functionality does pandas provide to a Python environment?

- a set of APIs to allow sensors to send data to a Raspberry Pi

- an enhanced chip for processing graphical information

- a set of data structures and tools for data analysis

- an algorithm to generate random numbers

Explanation: Pandas is an open source library with high-performance data structures and tools for analysis of large data sets.

24. A data analyst performs a correlation analysis between two quantities. The result of the analysis is an r value of 0.9. What does this mean?

- The two variables have almost the same values.

- One variable keeps its value at 90% of the other variable.

- When one variable increases its value, the other variable decreases its value.

- When one variable increases its value, the other variable increases its value in a very similar fashion.

Explanation: The commonly used correlation coefficient, Pearson r (or r value), is a quantity that is expressed as a value between -1 and 1. Positive values indicate a positive relationship between the changes in two quantities. Negative values indicate an inverse relationship. The magnitude of either the positive or negative values indicates the degree of correlation. The closer the value is to 1 or -1, the stronger the relationship.
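To make the sign and magnitude of r concrete, here is a minimal pure-Python sketch of the Pearson formula (the covariance term divided by the product of the two spread terms). The function name `pearson_r` and the sample lists are illustrative assumptions; in practice a library routine would normally be used.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    # Note: a constant sequence has zero variance and would divide by zero here.
    return cov / math.sqrt(var_x * var_y)


hours = [1, 2, 3, 4, 5]
rising = [2, 4, 5, 4, 5]      # tends to increase with hours: positive r
falling = [10, 8, 6, 4, 2]    # decreases in lockstep with hours: r of -1

r_up = pearson_r(hours, rising)
r_down = pearson_r(hours, falling)
print(r_up, r_down)
```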

25. A data analyst is processing a data set with pandas and notices a NaT. Which data type is expected for the missing data?

- string

- timestamp

- object

- integer

- float

Explanation: In a pandas data set, NaN is used to indicate an undefined string, integer, or float. NaT is used to indicate a missing timestamp.
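The difference between the two markers can be seen in a short pandas sketch. The input values are invented; `errors="coerce"` asks `pd.to_datetime` to turn entries it cannot parse into NaT instead of raising an error.

```python
import pandas as pd

# Hypothetical raw column with one valid date, one bad entry, and one gap.
raw = pd.Series(["2024-01-15", "not a date", None])
parsed = pd.to_datetime(raw, errors="coerce")  # unparseable or missing -> NaT

# A numeric column with a gap uses NaN instead.
numbers = pd.Series([1.5, None])

print(parsed)
print(numbers)
print(parsed.isna().tolist(), numbers.isna().tolist())
```

`isna()` reports both NaN and NaT as missing, so the same cleaning code can handle either kind of column.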

26. Which type of learning algorithm can predict the value of a variable of a loan interest rate based on the value of other variables?

- classification

- regression

- clustering

- association

Explanation: An example of how a regression algorithm might be used is to predict the cost of a house by looking at variables such as crime rate, average income level in the neighborhood, and how far the house is from a school.

27. In a regression analysis, which variable is known as the predictor or explanatory variable?

- independent

- first

- prime

- dependent

Explanation: The dependent variable is known as the target or response variable. The independent variable is also known as the predictor or explanatory variable.
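The roles of the two variables can be shown with a small least-squares sketch. The data are hypothetical: credit score plays the independent (predictor) variable and loan interest rate the dependent (target) variable. The helper `fit_line` is an illustrative name, not a library function.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data.
credit_scores = [600, 650, 700, 750, 800]   # independent / predictor variable
interest_rates = [9.0, 8.0, 7.0, 6.0, 5.0]  # dependent / target variable (percent)

slope, intercept = fit_line(credit_scores, interest_rates)

# Predict the target for a predictor value that was not in the data.
predicted_rate = slope * 720 + intercept
print(slope, intercept, predicted_rate)
```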

28. When you perform an experiment and follow the scientific method, what is the first step that you should take?

- Analyze gathered data.

- Ask questions about an observation.

- Form a hypothesis.

- Perform research.

Explanation: The scientific method is commonly used in scientific discovery and contains the following steps:

Step 1. Ask a question about an observation such as what, when, how, or why.

Step 2. Perform research.

Step 3. Form a hypothesis from this research.

Step 4. Test the hypothesis through experimentation.

Step 5. Analyze the data from the experiments to draw a conclusion.

Step 6. Communicate the results of the process.

29. Which type of validity is being used when a researcher compares the original conclusion against other people in other places at other times?

- construct

- conclusion

- internal

- external

Explanation: Researchers commonly perform verification tests using four types of validity:

Construct validity – Does the study really measure what it claims to measure?

Internal validity – Was the experiment actually designed correctly? Did it include all the steps of the scientific method?

External validity – Can the conclusions apply to other situations or other people in other places at other times? Are there any other causal relationships in the study that might cause the results?

Conclusion validity – Based on the relationships in the data, are the conclusions of the study reasonable?

30. Refer to the exhibit. What type of data exists outside of the decision boundary?

- historical

- big

- normal

- anomalous

Explanation: A scientist must calculate a decision boundary to detect anomalies. Anomalous data points are points that lie beyond the decision boundary sphere.
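A minimal sketch of the idea, assuming the decision boundary is a circle (a sphere in two dimensions) whose center and radius are already known. In practice the boundary would be computed from the data; the points, center, and radius below are hypothetical.

```python
import math


def split_by_boundary(points, center, radius):
    """Separate points inside the boundary from those beyond it."""
    normal, anomalous = [], []
    for point in points:
        if math.dist(point, center) > radius:
            anomalous.append(point)  # lies outside the decision boundary
        else:
            normal.append(point)
    return normal, anomalous


points = [(0.0, 0.0), (1.0, 1.0), (0.5, -0.5), (4.0, 4.0)]
normal, anomalous = split_by_boundary(points, center=(0.0, 0.0), radius=2.0)
print(normal, anomalous)
```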

31. What is a matplotlib module that includes a collection of style functions?

- Pyplot

- Plotly

- CSS

- Jupyter

Explanation: Pyplot is a matplotlib module that includes a collection of style functions. It can be used to create and customize a plot.
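A brief sketch of pyplot's plotting and styling functions follows. The data and labels are invented, and the `Agg` backend is selected only so the example runs without a display; the figure is written to an in-memory buffer instead of a file.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs without a display
import matplotlib.pyplot as plt

# Hypothetical data.
hours = [1, 2, 3, 4, 5]
readings = [20.1, 20.8, 21.5, 22.9, 23.4]

plt.figure()
# Style arguments control how the line is drawn.
plt.plot(hours, readings, color="tab:blue", linestyle="--", marker="o", label="sensor A")
plt.title("Temperature over time")
plt.xlabel("Hour")
plt.ylabel("Temperature (C)")
plt.grid(True)
plt.legend()

buf = io.BytesIO()
plt.savefig(buf, format="png")  # render the customized plot as PNG bytes
```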

32. Which tool is available online and is used to create data visualizations that include API libraries, figure converters, apps, and an open source JavaScript library?

- Plotly

- Jupyter

- Pyplot

- CSS

Explanation: Plotly is an online tool that can be used to quickly generate data visualizations. Plotly offers a variety of resources for data analysts and web developers including API libraries, figure converters, apps for Google Chrome, and an open source JavaScript library.

33. Which services are provided by a private cloud?

- online services to trusted vendors

- multiple internal IT services in an enterprise

- secure communications between sensors and actuators

- encrypted data storage in cloud computing

Explanation: Large enterprises typically have their own data center to manage data storage and data processing needs. The data center can be used to serve internal IT needs. In other words, the data center becomes a private cloud, a cloud computing infrastructure just for internal services.

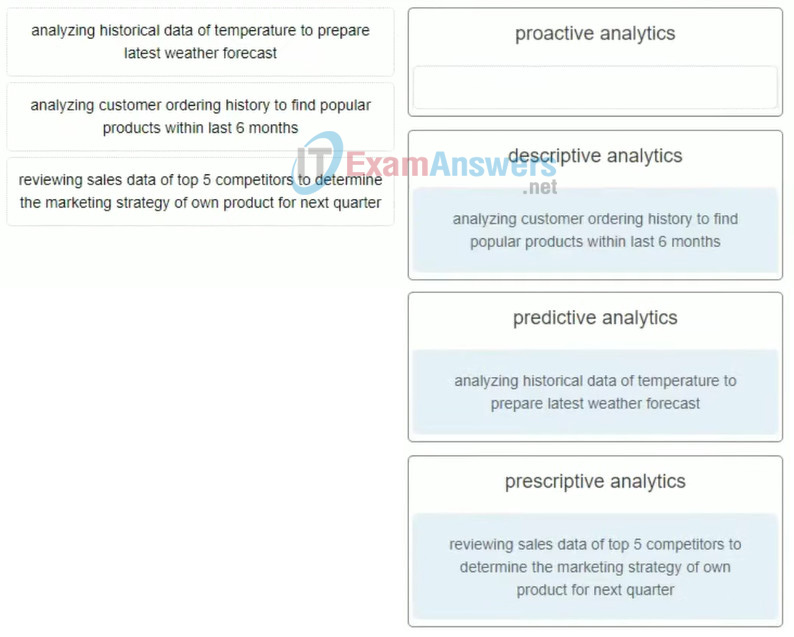

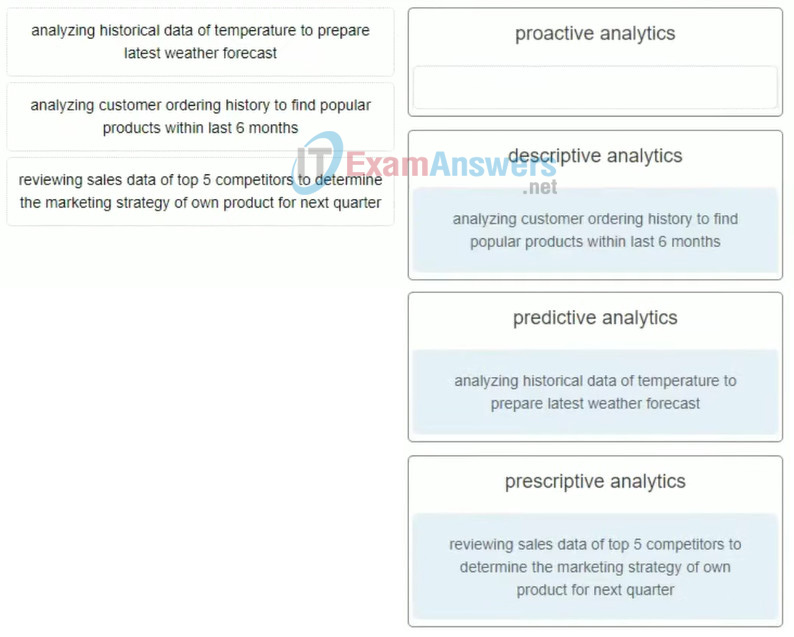

34. Match the task and purpose to the appropriate Big Data analytics method. (Not all options are used.)

Explanation: Data analytics, applied to Big Data, can be classified into three major types:

- Descriptive – provides information about the past state or performance of a person or an organization

- Predictive – attempts to predict the future, based on data and analysis, or what will happen next

- Prescriptive – predicts outcomes and suggests courses of actions that will hold the greatest benefit to an organization

35. Which service is an example of an extension to the cloud computing services defined by the National Institute of Standards and Technology?

Explanation: The National Institute of Standards and Technology (NIST) defines three main cloud computing services (IaaS, PaaS, and SaaS) in its Special Publication 800-145. Cloud service providers have extended this model to also provide IT support for each of the cloud computing services (ITaaS).

36. What is the main function of a hypervisor?

- It is used to create and manage multiple VM instances on a host machine.

- It is a device that filters and checks security credentials.

- It is software used to coordinate and prepare data for analysis.

- It is a device that synchronizes a group of sensors.

- It is used by ISPs to monitor cloud computing resources.

Explanation: A hypervisor is a key component of virtualization. A hypervisor is often software-based and is used to create and manage multiple VM instances.

37. Which solution improves the availability of big data applications by keeping frequently requested data in memory for fast access?

- sharding

- load balancing

- distributed databases

- memcaching

Explanation: Maintaining availability is the primary concern for companies working with big data. Some solutions to improve the availability include the following:

Load Balancing – deploying multiple web servers and DNS servers to respond to requests simultaneously

Distributed Databases – improving database access speed and the capacity to meet demand

Memcaching – offloading demand on database servers by keeping frequently requested data available in memory for fast access

Sharding – partitioning a large relational database across multiple servers to improve search speed
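Two of the solutions above, memcaching and sharding, can be sketched in plain Python. This is an illustration of the ideas only: `CachedLookup`, `slow_database_lookup`, and `shard_for` are hypothetical names, and a real deployment would use a dedicated cache service and the database's own partitioning scheme.

```python
import hashlib


class CachedLookup:
    """Keep previously requested values in memory to offload the backend."""

    def __init__(self, backend):
        self._backend = backend
        self._cache = {}
        self.backend_calls = 0

    def get(self, key):
        if key not in self._cache:
            self.backend_calls += 1          # cache miss: ask the database
            self._cache[key] = self._backend(key)
        return self._cache[key]              # cache hit: served from memory


def slow_database_lookup(key):
    # Stand-in for an expensive database query.
    return f"row-for-{key}"


def shard_for(key, shard_count):
    """Map a key to one of shard_count servers using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


lookup = CachedLookup(slow_database_lookup)
first = lookup.get("user:1")
second = lookup.get("user:1")   # the repeat request does not reach the backend
print(lookup.backend_calls, shard_for("user:1", 4))
```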

38. What is the first component in the big data pipeline?

- data processing

- data storage

- data transportation

- data ingestion

Explanation: The three basic components of the big data pipeline are data ingestion, data storage, and data processing or compute.

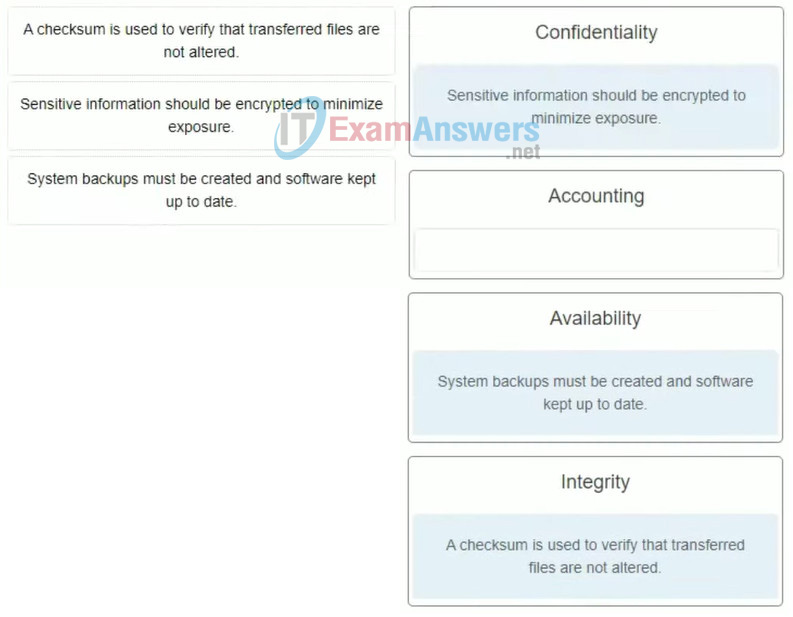

39. Match the description to the correct type of data security. (Not all options are used.)

40. How are file changes handled by Cassandra?

- A new file is created and the old deleted.

- Both versions are maintained.

- Changes are prepended.

- Changes are appended.

Explanation: Cassandra uses sequential reads and writes to maintain fast speeds. Instead of appending to files, when data is added to or removed from a file, a new file is created, and the old file or files are deleted.
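The write-new-then-delete-old pattern described above can be sketched with ordinary files. This is purely an illustration of the idea and not Cassandra's storage engine; the JSON file layout and the function name `rewrite_with_change` are assumptions made for the example.

```python
import json
import os
import tempfile


def rewrite_with_change(path, key, value):
    """Apply a change by writing a new file, then deleting the old one."""
    with open(path) as f:
        data = json.load(f)
    if value is None:
        data.pop(key, None)   # removal of data
    else:
        data[key] = value     # addition or update
    # The existing file is never modified in place; a new file is written.
    fd, new_path = tempfile.mkstemp(dir=os.path.dirname(path), suffix=".json")
    with os.fdopen(fd, "w") as f:
        json.dump(data, f)
    os.remove(path)           # the old file is deleted
    return new_path


workdir = tempfile.mkdtemp()
first_file = os.path.join(workdir, "segment-1.json")
with open(first_file, "w") as f:
    json.dump({"a": 1}, f)

second_file = rewrite_with_change(first_file, "b", 2)
with open(second_file) as f:
    contents = json.load(f)
print(contents)
```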