9.0 Introduction

9.0.1 Welcome

9.0.1.1 Chapter 9: Implementing the Cisco Adaptive Security Appliance

Transport Layer

Data networks and the Internet support the human network by supplying reliable communication between people. On a single device, people can use multiple applications and services such as email, the web, and instant messaging to send messages or retrieve information. Data from each of these applications is packaged, transported and delivered to the appropriate application on the destination device.

The processes described in the OSI transport layer accept data from the application layer and prepare it for addressing at the network layer. A source computer communicates with a receiving computer to decide how to break up data into segments, how to make sure none of the segments get lost, and how to verify all the segments arrived. When thinking about the transport layer, think of a shipping department preparing a single order of multiple packages for delivery.

9.1 Introduction to the ASA

9.1.1 ASA Solutions

9.1.1.1 ASA Firewall Models

Role of the Transport Layer

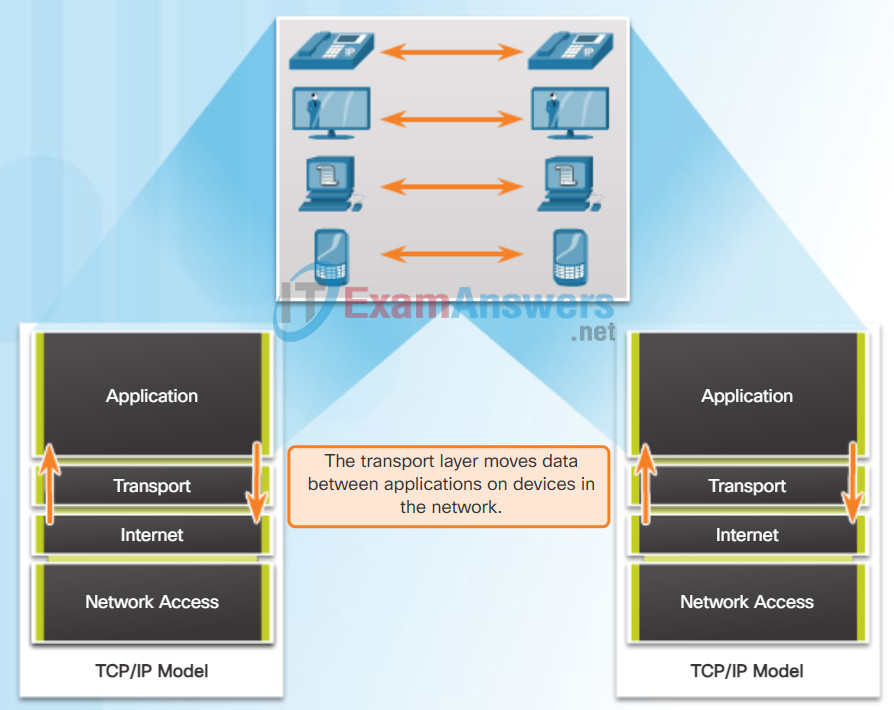

The transport layer is responsible for establishing a temporary communication session between two applications and delivering data between them. An application generates data that is sent from an application on a source host to an application on a destination host. The transport layer delivers this data without regard to the destination host type, the type of media over which the data must travel, the path taken by the data, the congestion on a link, or the size of the network. As shown in the figure, the transport layer is the link between the application layer and the lower layers that are responsible for network transmission.

Enabling Applications on Devices to Communicate

9.1.1.2 Cisco ASA Next-Generation Firewall Appliances

Tracking Individual Conversations

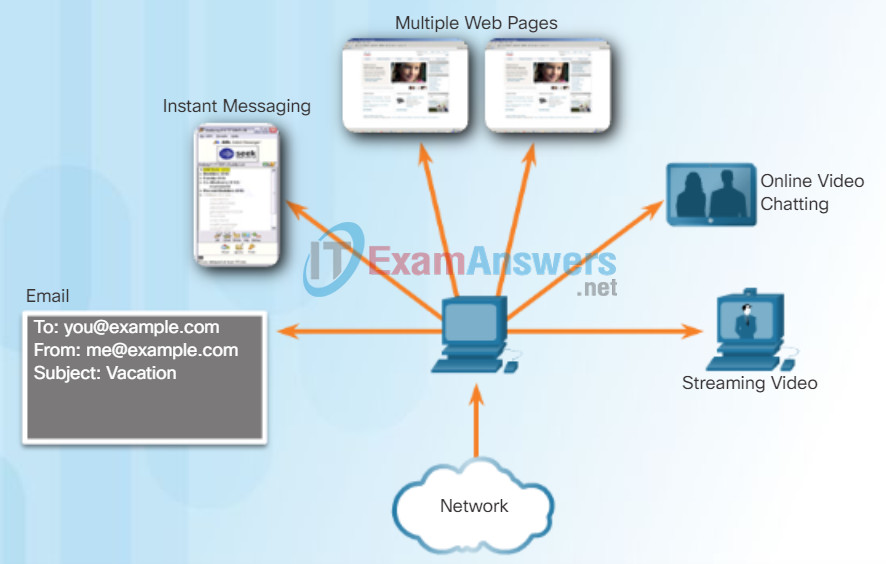

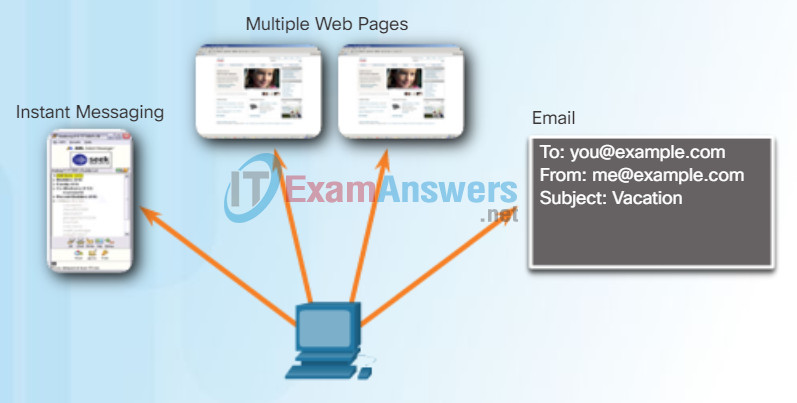

At the transport layer, each set of data flowing between a source application and a destination application is known as a conversation (Figure 1). A host may have multiple applications that are communicating across the network simultaneously. Each of these applications communicates with one or more applications on one or more remote hosts. It is the responsibility of the transport layer to maintain and track these multiple conversations.

Tracking the Conversations

The transport layer tracks each individual conversation flowing between a source application and a destination application separately.

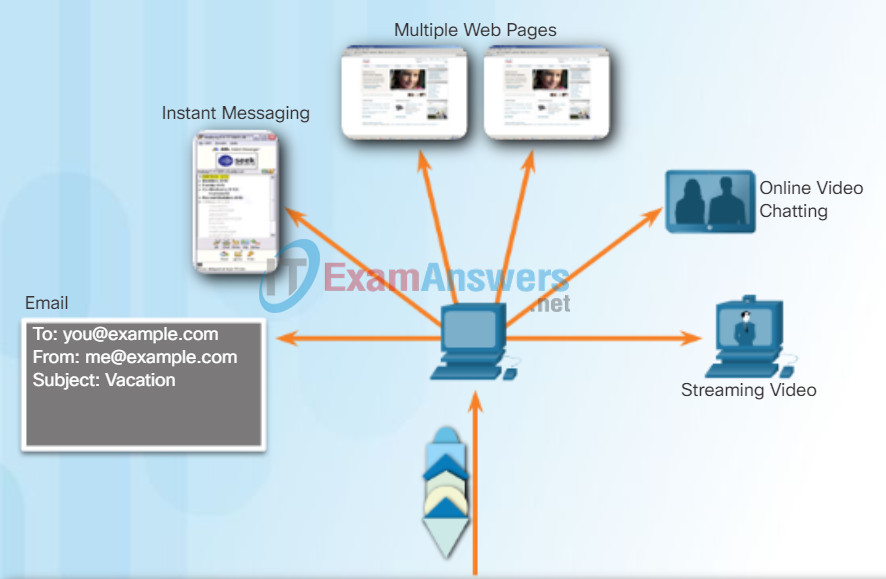

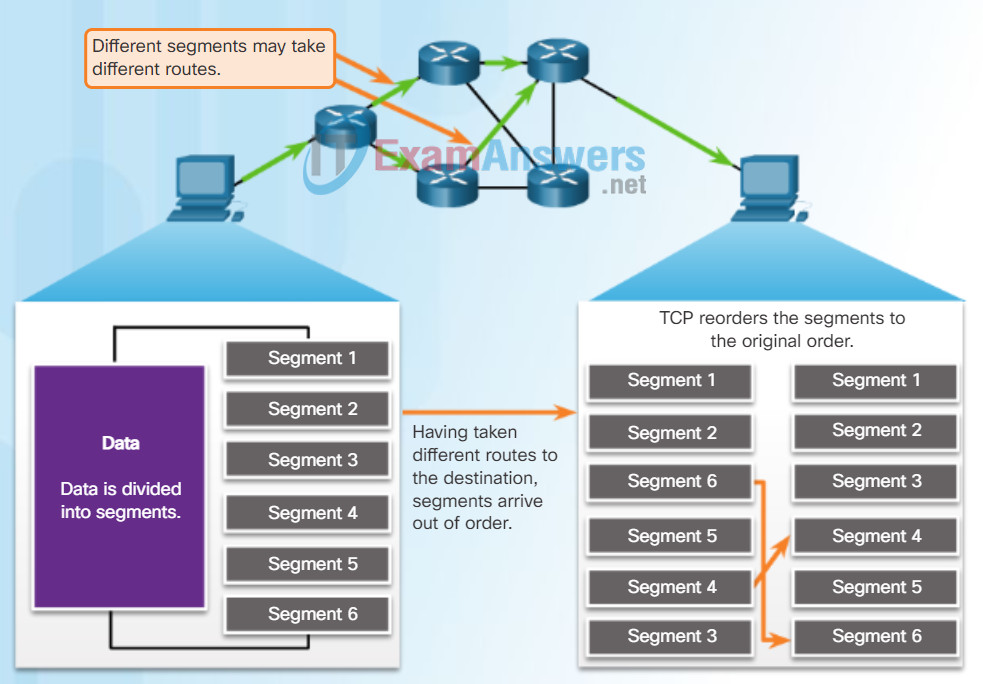

Segmenting Data and Reassembling Segments

Data must be prepared to be sent across the media in manageable pieces. Most networks have a limitation on the amount of data that can be included in a single packet. Transport layer protocols have services that segment the application data into blocks of an appropriate size (Figure 2). This service includes the encapsulation required on each piece of data: a header is added to each block of data, and the information in this header is used to track the data stream and reassemble it.

At the destination, the transport layer must be able to reconstruct the pieces of data into a complete data stream that is useful to the application layer. The protocols at the transport layer describe how the transport layer header information is used to reassemble the data pieces into streams to be passed to the application layer.
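The segmentation and reassembly steps above can be sketched in Python. This is a simplified illustration, not how a real TCP/IP stack works. `segment` and `reassemble` are hypothetical helper names. Each segment is assumed to be a `(sequence_number, payload)` tuple, where the sequence number is the byte offset of the payload's first byte and stands in for the header information.

```python
def segment(data: bytes, max_payload: int) -> list[tuple[int, bytes]]:
    """Split an application data stream into blocks of at most max_payload bytes.

    Each block is tagged with a sequence number (the byte offset of its first
    byte), standing in for the header information added to each block.
    """
    if max_payload <= 0:
        raise ValueError("max_payload must be positive")
    return [
        (offset, data[offset:offset + max_payload])
        for offset in range(0, len(data), max_payload)
    ]


def reassemble(segments: list[tuple[int, bytes]]) -> bytes:
    """Rebuild the data stream by ordering blocks on their sequence numbers."""
    ordered = sorted(segments, key=lambda seg: seg[0])
    return b"".join(payload for _, payload in ordered)


if __name__ == "__main__":
    message = b"hello transport layer"
    pieces = segment(message, 5)
    print(pieces[0])                                       # (0, b'hello')
    print(reassemble(list(reversed(pieces))) == message)   # True
```

Because the sequence number travels with each block, reassembly still works when the blocks arrive in a different order than they were sent.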

Segmentation

The transport layer divides the data into segments that are easier to manage and transport.

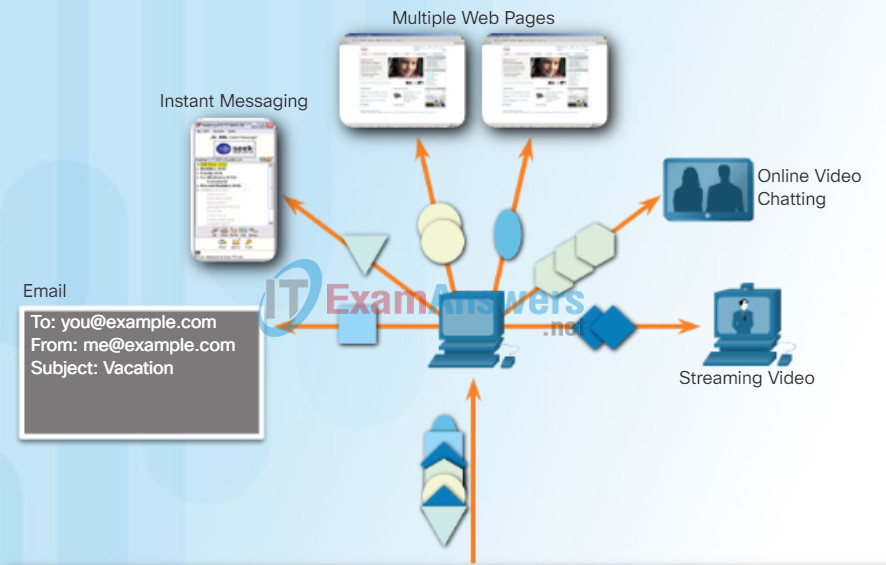

Identifying the Applications

To pass data streams to the proper applications, the transport layer must identify the target application (Figure 3). To accomplish this, the transport layer assigns each application an identifier called a port number. Each software process that needs to access the network is assigned a port number unique to that host.
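The port-number idea can be illustrated with a small lookup table. This is a hypothetical model, not an operating-system API. `PortTable` and the application names are made up for the example. Ports 80 and 25 are the well-known ports for web (HTTP) and email (SMTP) servers.

```python
class PortTable:
    """Hypothetical model of a host's port assignments for one transport protocol."""

    def __init__(self) -> None:
        self._apps: dict[int, str] = {}

    def assign(self, port: int, app: str) -> None:
        # Port numbers are 16-bit values, and each one identifies a single process.
        if not 0 <= port <= 65535:
            raise ValueError(f"{port} is not a valid 16-bit port number")
        if port in self._apps:
            raise ValueError(f"port {port} is already assigned to {self._apps[port]}")
        self._apps[port] = app

    def deliver(self, dest_port: int, data: bytes) -> tuple[str, bytes]:
        """Return (application, data) for an incoming segment's destination port."""
        try:
            return self._apps[dest_port], data
        except KeyError:
            raise LookupError(f"no application is using port {dest_port}") from None


if __name__ == "__main__":
    host = PortTable()
    host.assign(80, "web server")
    host.assign(25, "email server")
    print(host.deliver(25, b"mail data"))   # ('email server', b'mail data')
```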

Identifying the Application

The transport layer ensures that even with multiple applications running on a device, all applications receive the correct data.

9.1.1.3 Advanced ASA Firewall Features

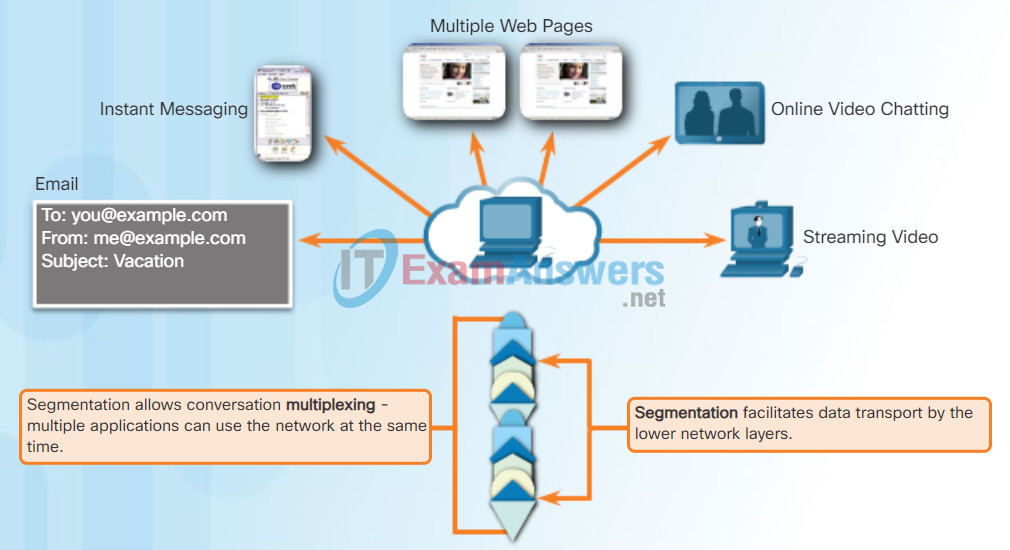

Conversation Multiplexing

Sending some types of data (for example, streaming video) across a network as one complete communication stream can consume all of the available bandwidth. This would prevent other communications from occurring at the same time. It would also make error recovery and retransmission of damaged data difficult.

The figure shows that segmenting the data into smaller chunks enables many different communications, from many different users, to be interleaved (multiplexed) on the same network.

To identify each segment of data, the transport layer adds a header containing binary data organized into several fields. It is the values in these fields that enable various transport layer protocols to perform different functions in managing data communication.

Transport Layer Services

Error checking can be performed on the data in the segment to check if the segment was changed during transmission.

9.1.1.4 Review of Firewalls in Network Design

Transport Layer Reliability

The transport layer is also responsible for managing reliability requirements of a conversation. Different applications have different transport reliability requirements.

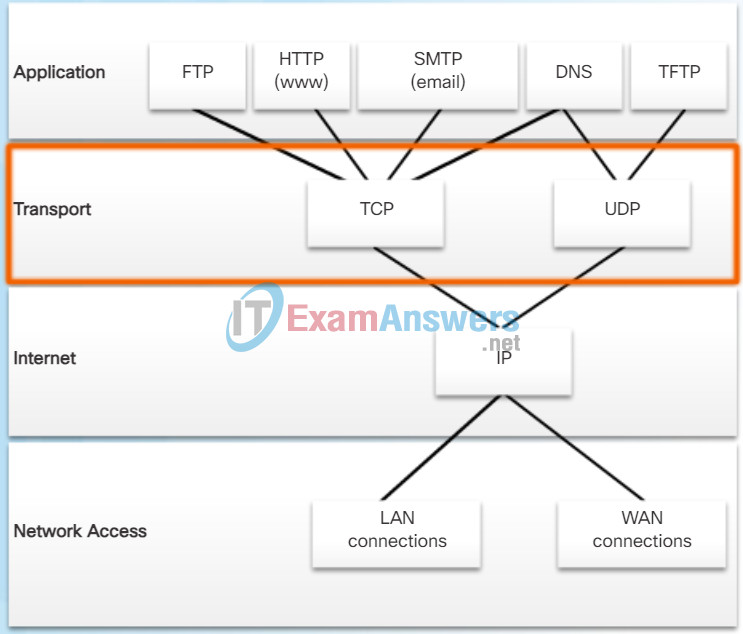

IP is concerned only with the structure, addressing, and routing of packets. IP does not specify how the delivery or transportation of the packets takes place. Transport protocols specify how to transfer messages between hosts. TCP/IP provides two transport layer protocols, Transmission Control Protocol (TCP) and User Datagram Protocol (UDP), as shown in the figure. TCP and UDP segments are carried inside IP packets, so the transport protocols and IP work together to enable hosts to communicate and transfer data.

TCP is considered a reliable, full-featured transport layer protocol, which ensures that all of the data arrives at the destination. However, this requires additional fields in the TCP header, which increase the size of the packet and also increase delay. In contrast, UDP is a simpler transport layer protocol that does not provide for reliability. It therefore has fewer fields and is faster than TCP.

9.1.1.5 ASA Firewall Modes of Operation

TCP

TCP transport is analogous to sending packages that are tracked from source to destination. If a shipping order is broken up into several packages, a customer can check online to see the order of the delivery.

With TCP, there are three basic operations of reliability:

- Numbering and tracking data segments transmitted to a specific host from a specific application

- Acknowledging received data

- Retransmitting any unacknowledged data after a certain period of time
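The three reliability operations can be sketched as a small simulation. This is a deliberately simplified stop-and-wait model built on several assumptions. Segments are numbered by position rather than by byte. Only one segment is outstanding at a time, whereas real TCP keeps many in flight. Lost acknowledgments are not modeled, and the lossy network is just a seeded random number generator. `send_reliably` is a hypothetical name.

```python
import random


def send_reliably(segments: list[bytes], loss_rate: float, seed: int = 0,
                  max_attempts: int = 20) -> tuple[list[bytes], int]:
    """Simplified stop-and-wait model of TCP's three reliability operations.

    Returns the data the receiver ends up with and the total number of
    transmissions (original sends plus retransmissions).
    """
    rng = random.Random(seed)              # deterministic "network" for repeatable runs
    received: dict[int, bytes] = {}
    transmissions = 0
    for seq, payload in enumerate(segments):      # 1. number each segment
        for _ in range(max_attempts):
            transmissions += 1
            if rng.random() >= loss_rate:
                received[seq] = payload           # the segment arrived...
                break                             # 2. ...and the receiver acknowledged it
            # No acknowledgment arrived before the (simulated) timer expired,
            # so loop around and 3. retransmit the same segment.
        else:
            raise TimeoutError(f"segment {seq} was never acknowledged")
    return [received[seq] for seq in range(len(segments))], transmissions
```

With `loss_rate=0.0` every segment is sent exactly once. With losses, the transmission count grows by one for each retransmission. That growth is the extra overhead that reliability costs.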

The figure shows how TCP segments and acknowledgments are transmitted between sender and receiver.

9.1.1.6 ASA Licensing Requirements

UDP

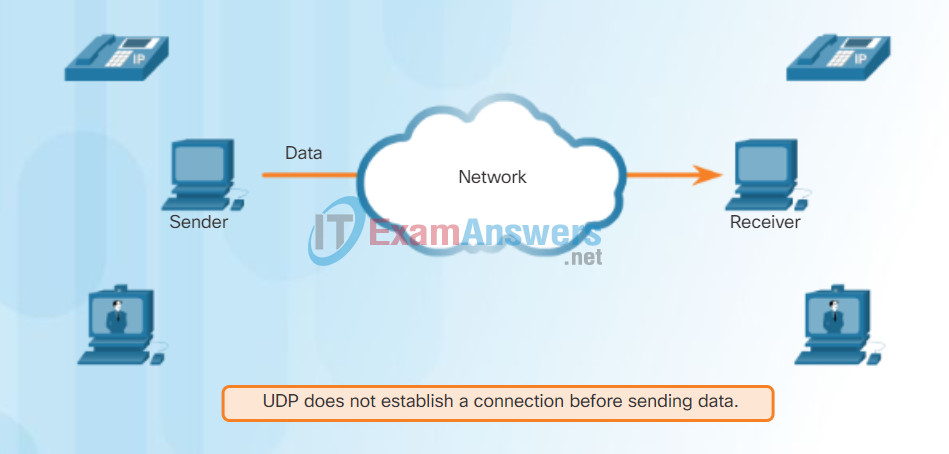

While the TCP reliability functions provide more robust communication between applications, they also incur additional overhead and possible delays in transmission. There is a trade-off between the value of reliability and the burden it places on network resources. Adding overhead to ensure reliability for some applications could reduce the usefulness of the application and can even be detrimental. In such cases, UDP is a better transport protocol.

UDP provides the basic functions for delivering data segments between the appropriate applications, with very little overhead and data checking. UDP is known as a best-effort delivery protocol. In the context of networking, best-effort delivery is referred to as unreliable because there is no acknowledgment that the data is received at the destination. With UDP, there are no transport layer processes that inform the sender of a successful delivery.

UDP is similar to placing a regular, non-registered letter in the mail. The sender of the letter does not know whether the receiver is available to receive the letter. Nor is the post office responsible for tracking the letter or informing the sender if the letter does not arrive at the final destination.

The figure shows UDP segments being transmitted from sender to receiver.

9.1.2 Basic ASA Configuration

9.1.2.1 Overview of ASA 5505

TCP Features

To understand the differences between TCP and UDP, it is important to understand how each protocol implements specific reliability features and how they track conversations. In addition to supporting the basic functions of data segmentation and reassembly, TCP, as shown in the figure, also provides other services.

Establishing a Session

TCP is a connection-oriented protocol. A connection-oriented protocol negotiates and establishes a connection (or session) between source and destination devices before forwarding any traffic, and that connection lasts until the communication ends. Through session establishment, the devices negotiate the amount of traffic that can be forwarded at a given time, and the communication data between the two can be closely managed.

Reliable Delivery

In networking terms, reliability means ensuring that each segment that the source sends arrives at the destination. For many reasons, a segment can become corrupted or be lost completely as it is transmitted over the network.

Same-Order Delivery

Because networks may provide multiple routes that can have different transmission rates, data can arrive in the wrong order. By numbering and sequencing the segments, TCP can ensure that these segments are reassembled into the proper order.

Flow Control

Network hosts have limited resources, such as memory and processing power. When TCP is aware that these resources are overtaxed, it can request that the sending application reduce the rate of data flow. TCP does this by regulating the amount of data the source transmits. Flow control can prevent the need for retransmission of the data when the receiving host’s resources are overwhelmed.

For more information on TCP, read the RFC.

TCP Services

- Establishing a session ensures the application is ready to receive the data.

- Same-order delivery ensures that the segments are reassembled into the proper order.

- Reliable delivery means lost segments are resent so the data is received complete.

- Flow control ensures that the receiver is able to process the data received.

9.1.2.2 ASA Security Levels

TCP is a stateful protocol. A stateful protocol is a protocol that keeps track of the state of the communication session. To track the state of a session, TCP records which information it has sent and which information has been acknowledged. The stateful session begins with session establishment and ends with session termination.

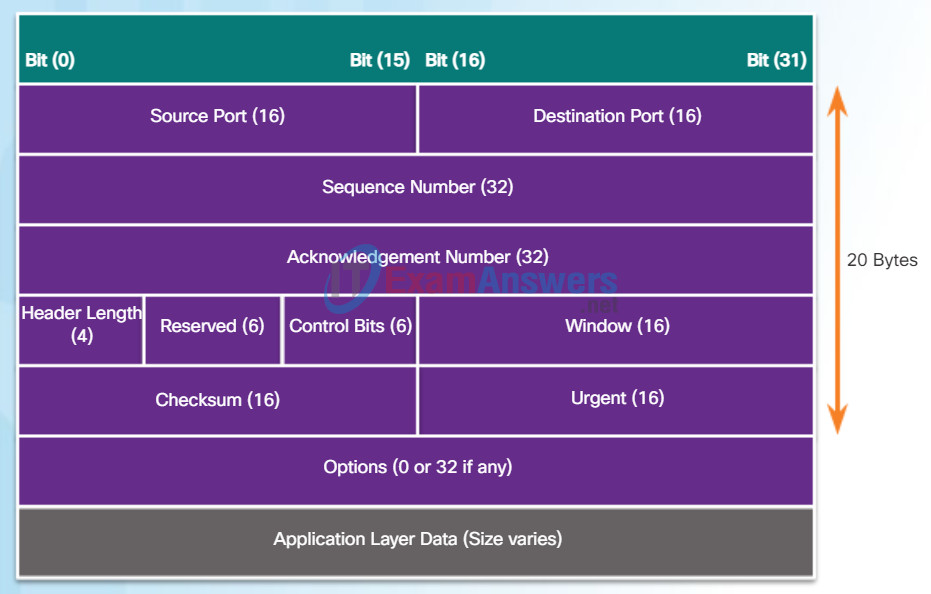

As shown in the figure, each TCP segment has a minimum of 20 bytes of overhead in the header encapsulating the application layer data:

- Source Port (16 bits) and Destination Port (16 bits) – Used to identify the application.

- Sequence number (32 bits) – Used for data reassembly purposes.

- Acknowledgment number (32 bits) – Indicates the data that has been received.

- Header length (4 bits) – Known as "data offset". Indicates the length of the TCP segment header.

- Reserved (6 bits) – This field is reserved for the future.

- Control bits (6 bits) – Includes bit codes, or flags, which indicate the purpose and function of the TCP segment.

- Window size (16 bits) – Indicates the number of bytes that can be accepted at one time.

- Checksum (16 bits) – Used for error checking of the segment header and data.

- Urgent pointer (16 bits) – Used with the URG control bit to indicate where the urgent data in the segment ends.
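The field list above maps directly onto Python's standard `struct` module. This sketch assumes the original six control bits (URG, ACK, PSH, RST, SYN, FIN) and a header with no options. It leaves the checksum at zero because a real TCP checksum also covers a pseudo-header built from the IP addresses, which is outside this example. `build_tcp_header` and `parse_tcp_header` are hypothetical helper names.

```python
import struct

# Network byte order; the ten fields listed above packed into 20 bytes:
# src port, dst port (H, H) | sequence (I) | acknowledgment (I) |
# data offset + reserved + control bits (H) | window (H) | checksum (H) | urgent pointer (H)
TCP_HEADER = struct.Struct("!HHIIHHHH")

# The six original control bits, lowest-order bit first.
FLAG_BITS = {"FIN": 0x01, "SYN": 0x02, "RST": 0x04, "PSH": 0x08, "ACK": 0x10, "URG": 0x20}


def build_tcp_header(src_port: int, dst_port: int, seq: int, ack: int,
                     flags: list[str], window: int) -> bytes:
    data_offset = TCP_HEADER.size // 4      # header length in 32-bit words (5 = 20 bytes)
    control = 0
    for name in flags:
        control |= FLAG_BITS[name]
    # 4-bit data offset, then 6 reserved bits (left as zero), then 6 control bits.
    offset_and_flags = (data_offset << 12) | control
    checksum = 0         # placeholder: the real checksum also covers an IP pseudo-header
    urgent_pointer = 0
    return TCP_HEADER.pack(src_port, dst_port, seq, ack, offset_and_flags,
                           window, checksum, urgent_pointer)


def parse_tcp_header(raw: bytes) -> dict:
    (src_port, dst_port, seq, ack, offset_and_flags,
     window, checksum, urgent_pointer) = TCP_HEADER.unpack_from(raw)
    return {
        "src_port": src_port,
        "dst_port": dst_port,
        "seq": seq,
        "ack": ack,
        "header_len": (offset_and_flags >> 12) * 4,   # data offset converted to bytes
        "flags": [name for name, bit in FLAG_BITS.items() if offset_and_flags & bit],
        "window": window,
        "checksum": checksum,
        "urgent_pointer": urgent_pointer,
    }


if __name__ == "__main__":
    header = build_tcp_header(49152, 80, seq=1, ack=0, flags=["SYN"], window=10000)
    print(len(header))                         # 20
    print(parse_tcp_header(header)["flags"])   # ['SYN']
```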

TCP Segment

9.1.2.3 ASA 5505 Deployment Scenarios

User Datagram Protocol (UDP) is considered a best-effort transport protocol. UDP is a lightweight transport protocol that offers data segmentation and reassembly like TCP, but without TCP's reliability, sequencing, and flow control. UDP is such a simple protocol that it is usually described in terms of what it does not do compared to TCP.

The features of UDP are described in the figure.

For more information on UDP, read the RFC.

UDP

Features of UDP

- Data is reconstructed in the order that it is received.

- Any segments lost are not resent.

- No session establishment.

- Does not inform the sender about resource availability.

9.2 ASA Firewall Configuration

9.2.1 The ASA Firewall Configuration

9.2.1.1 Basic ASA Settings

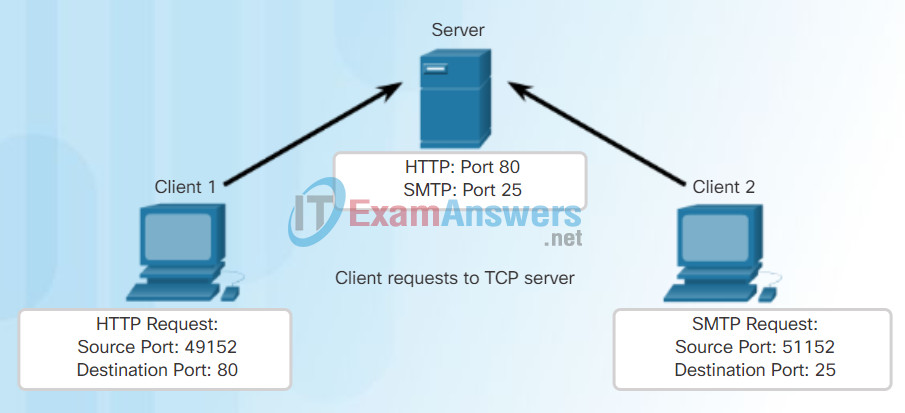

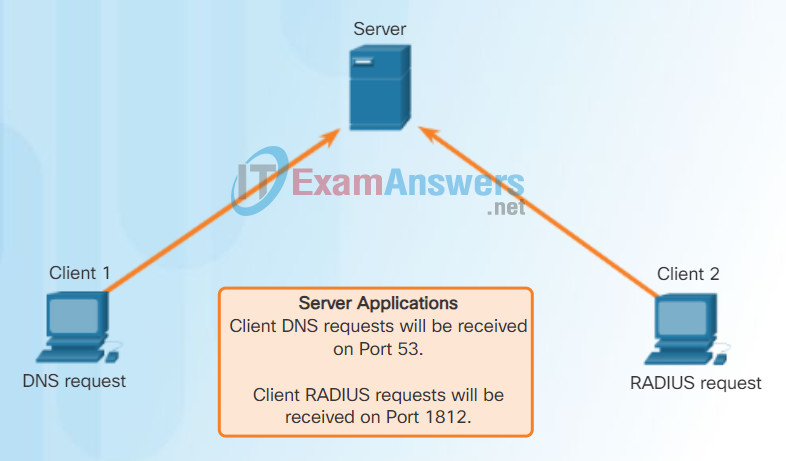

Each application process running on the server is configured to use a port number, either by default or manually by a system administrator. An individual server cannot have two services assigned to the same port number within the same transport layer protocol.

For example, a host running a web server application and a file transfer application cannot have both configured to use the same port (for example, TCP port 80). An active server application assigned to a specific port is considered to be open, which means that the transport layer accepts and processes segments addressed to that port. Any incoming client request addressed to the correct socket is accepted, and the data is passed to the server application. There can be many ports open simultaneously on a server, one for each active server application.
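Operating systems enforce this rule when a program binds a socket. The sketch below uses Python's standard `socket` module on the loopback interface. It lets the OS choose a free port instead of using port 80, because binding to ports below 1024 usually requires administrator privileges on Unix-like systems. `can_bind` is a hypothetical helper name, and the exact error reported for a busy port varies by platform.

```python
import socket


def can_bind(sock_type: int, port: int, host: str = "127.0.0.1") -> bool:
    """Try to bind a new socket of the given type (TCP or UDP) to host:port."""
    with socket.socket(socket.AF_INET, sock_type) as sock:
        try:
            sock.bind((host, port))
            return True
        except OSError:        # typically "address already in use": the port is open
            return False


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))    # port 0: let the OS choose a free port
        server.listen()                  # the port is now open for TCP
        port = server.getsockname()[1]
        print(can_bind(socket.SOCK_STREAM, port))   # False: TCP port already in use
        print(can_bind(socket.SOCK_DGRAM, port))    # True: UDP has its own port numbers
```

The second result reflects the "same transport layer protocol" qualification. TCP and UDP keep separate sets of port numbers, so a UDP service can use the same number as a TCP service.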

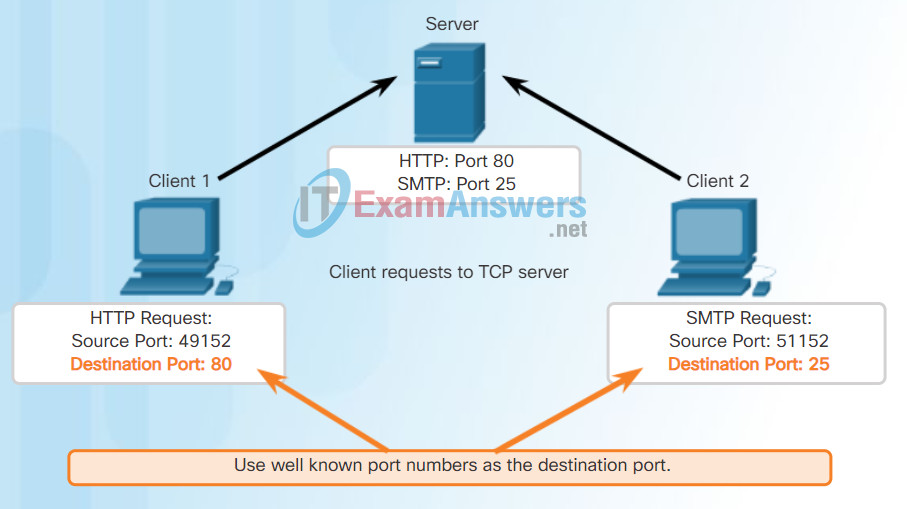

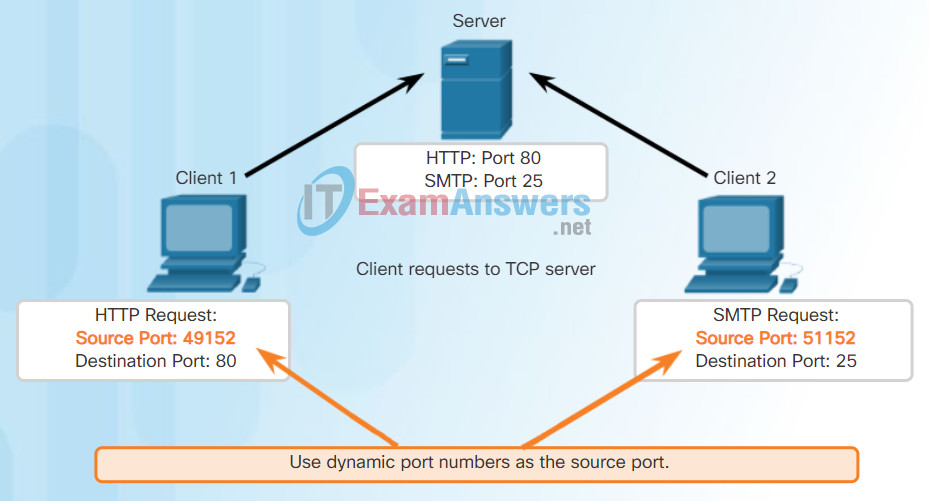

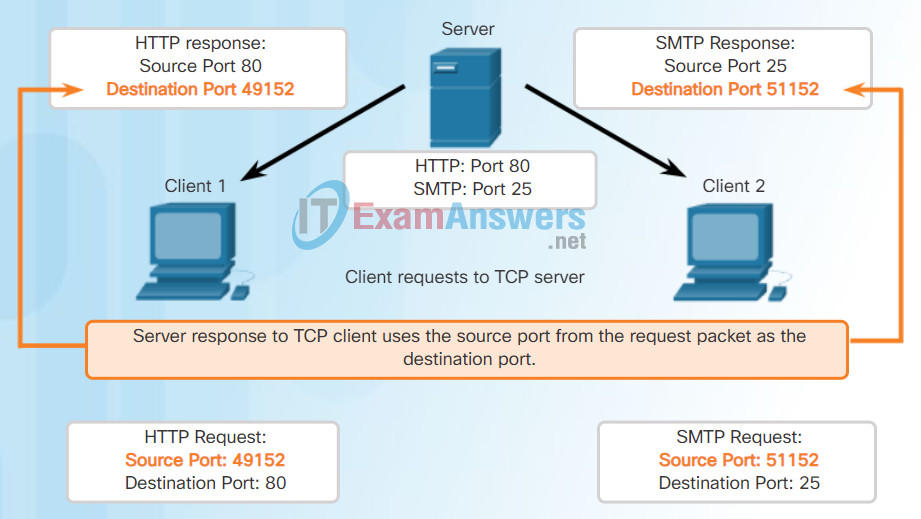

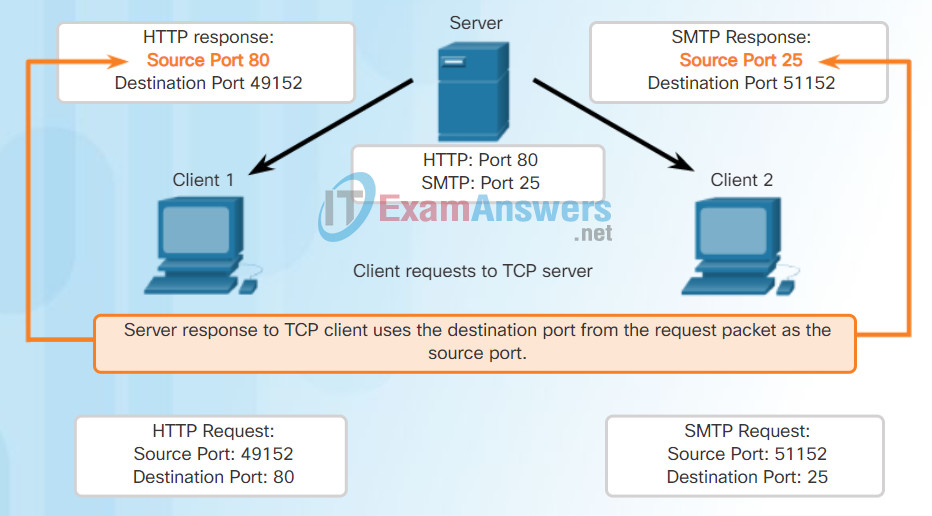

Refer to Figures 1 through 5 to see the typical allocation of source and destination ports in TCP client/server operations.

Clients Sending TCP Requests

Request Destination Ports

Request Source Ports

Response Destination Ports

Response Source Ports

9.2.1.2 ASA Default Configuration

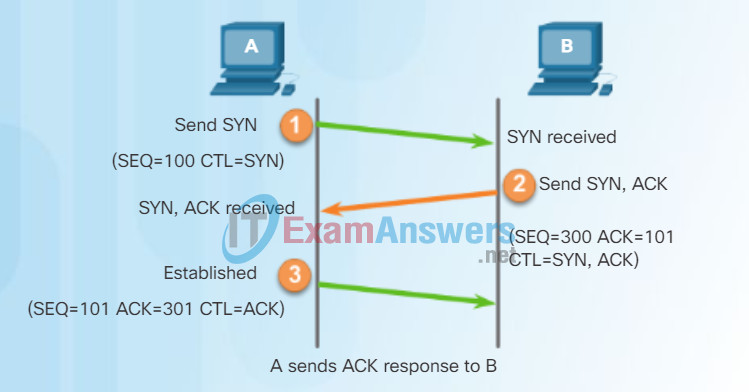

TCP Connection Establishment

In some cultures, when two people meet, they often greet each other by shaking hands. The act of shaking hands is understood by both parties as a signal for a friendly greeting. Connections on the network are similar. In TCP connections, the host client establishes the connection with the server.

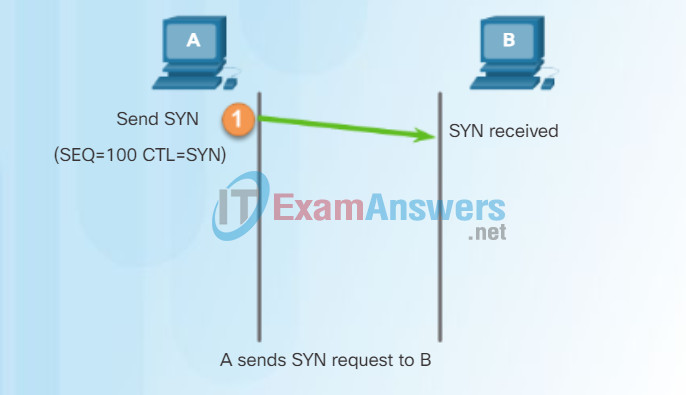

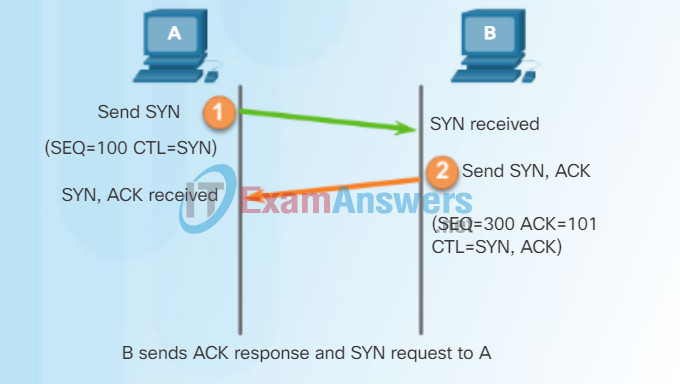

A TCP connection is established in three steps:

Step 1 – The initiating client requests a client-to-server communication session with the server.

Step 2 – The server acknowledges the client-to-server communication session and requests a server-to-client communication session.

Step 3 – The initiating client acknowledges the server-to-client communication session.
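The three steps can be modeled as a short exchange of segments. This sketch is an illustration, not a TCP implementation. The `Segment` class and `three_way_handshake` are hypothetical names. The sketch also adds two details that are standard TCP but not covered above. First, the steps are carried by the SYN and ACK control bits. Second, a SYN consumes one sequence number, so each side acknowledges the other's ISN plus one.

```python
import random
from dataclasses import dataclass
from typing import Optional

SEQ_MOD = 2 ** 32   # sequence numbers are 32-bit values that wrap around


@dataclass(frozen=True)
class Segment:
    sender: str
    flags: tuple[str, ...]
    seq: int
    ack: Optional[int] = None   # acknowledgment number, present when ACK is set


def three_way_handshake(client_isn: int, server_isn: int) -> list[Segment]:
    return [
        # Step 1: the client requests a client-to-server session.
        Segment("client", ("SYN",), seq=client_isn),
        # Step 2: the server acknowledges it and requests a server-to-client session.
        Segment("server", ("SYN", "ACK"), seq=server_isn,
                ack=(client_isn + 1) % SEQ_MOD),
        # Step 3: the client acknowledges the server-to-client session.
        Segment("client", ("ACK",), seq=(client_isn + 1) % SEQ_MOD,
                ack=(server_isn + 1) % SEQ_MOD),
    ]


if __name__ == "__main__":
    # Real ISNs are effectively random 32-bit values.
    for seg in three_way_handshake(random.getrandbits(32), random.getrandbits(32)):
        print(seg)
```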

The figure shows these three steps of TCP connection establishment.

9.2.1.3 ASA Interactive Setup Initialization Wizard

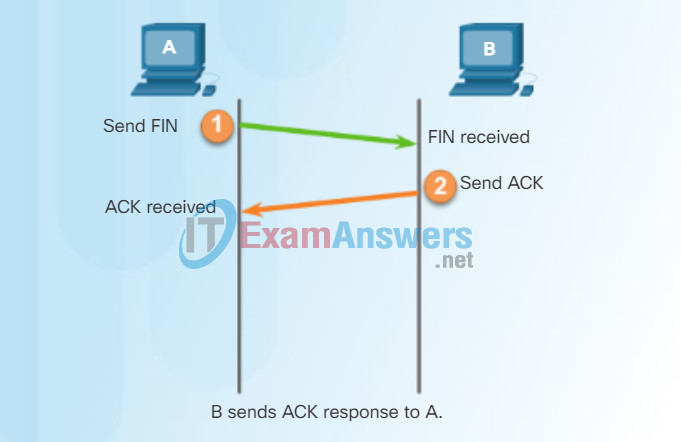

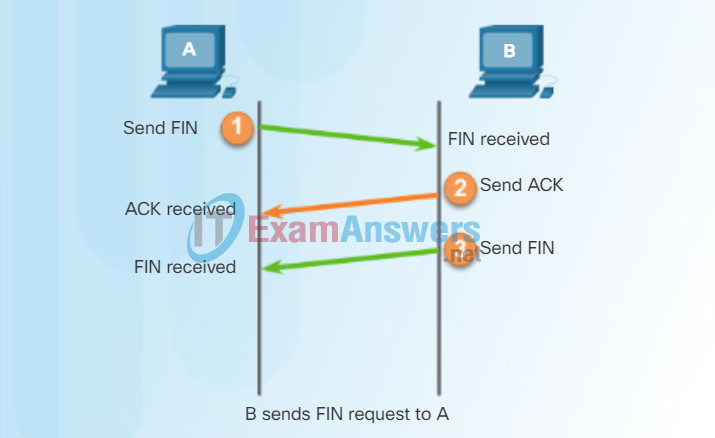

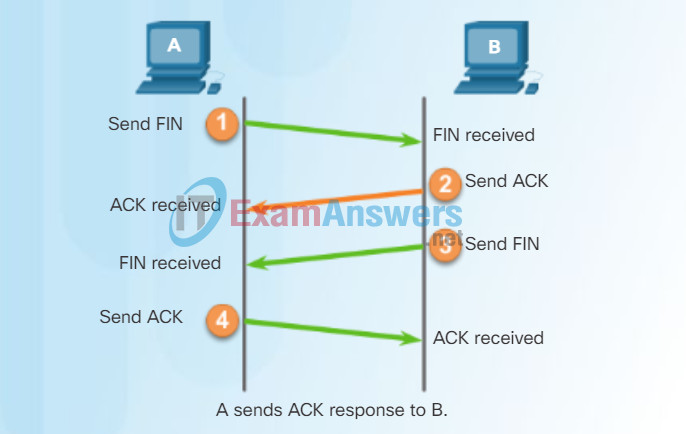

TCP Session Termination

To close a connection, the Finish (FIN) control flag must be set in the segment header. To end each one-way TCP session, a two-way handshake, consisting of a FIN segment and an Acknowledgment (ACK) segment, is used. Therefore, to terminate a single conversation supported by TCP, four exchanges are needed to end both sessions.

The figure shows the four steps of TCP connection termination.

Note: In this explanation, the terms client and server are used for simplicity, but the termination process can be initiated by either of the two hosts in an open session:

Step 1 – When the client has no more data to send in the stream, it sends a segment with the FIN flag set.

Step 2 – The server sends an ACK to acknowledge the receipt of the FIN to terminate the session from client to server.

Step 3 – The server sends a FIN to the client to terminate the server-to-client session.

Step 4 – The client responds with an ACK to acknowledge the FIN from the server.

When all segments have been acknowledged, the session is closed.
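The termination steps can be modeled as a list of segments. This sketch is illustrative rather than a TCP implementation, and `four_way_close` is a hypothetical name. It adds two standard TCP details not covered above. A FIN consumes one sequence number, so it is acknowledged with seq + 1. Once a connection is established, every segment carries the ACK bit, so the FIN segments are shown as "FIN, ACK".

```python
SEQ_MOD = 2 ** 32   # sequence numbers wrap around at 2^32


def four_way_close(client_seq: int, server_seq: int) -> list[tuple[str, str, int, int]]:
    """Return (sender, control bits, seq, ack) for each termination step.

    client_seq and server_seq are each side's next sequence number when the
    close begins.
    """
    client_fin_acked = (client_seq + 1) % SEQ_MOD   # the client's FIN uses one number
    server_fin_acked = (server_seq + 1) % SEQ_MOD   # the server's FIN uses one number
    return [
        ("client", "FIN, ACK", client_seq, server_seq),          # Step 1
        ("server", "ACK", server_seq, client_fin_acked),          # Step 2
        ("server", "FIN, ACK", server_seq, client_fin_acked),     # Step 3
        ("client", "ACK", client_fin_acked, server_fin_acked),    # Step 4
    ]


if __name__ == "__main__":
    for step in four_way_close(500, 900):
        print(step)
```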

9.2.2 Configuring Management Settings and Services

9.2.2.1 Enter Global Configuration Mode

TCP Reliability – Ordered Delivery

TCP segments may arrive at their destination out of order. For the original message to be understood by the recipient, the data in these segments is reassembled into the original order. To achieve this, a sequence number is placed in the header of each segment. The sequence number represents the first data byte of the TCP segment.

During session setup, an initial sequence number (ISN) is set. The ISN is the starting value used to number the bytes that are transmitted to the receiving application during this session. As data is transmitted during the session, the sequence number is incremented by the number of bytes that have been transmitted. This byte tracking enables each segment to be uniquely identified and acknowledged, so missing segments can be identified.

Note: The ISN does not begin at one but is effectively a random number. This is to prevent certain types of malicious attacks. For simplicity, we will use an ISN of 1 for the examples in this chapter.

Segment sequence numbers indicate how to reassemble and reorder received segments, as shown in the figure.

The receiving TCP process places the data from a segment into a receiving buffer. Segments are placed in the proper sequence order and passed to the application layer when reassembled. Any segments that arrive with sequence numbers that are out of order are held for later processing. Then, when the segments with the missing bytes arrive, these segments are processed in order.
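The receive-buffer behavior can be sketched as a small class. This is a simplified model built on several assumptions. `ReceiveBuffer` is a hypothetical name, and the ISN is 1, as in this chapter's examples. Segments never overlap, and sequence-number wraparound is ignored. The value returned by `receive` is the acknowledgment number, which is the next byte the receiver expects.

```python
class ReceiveBuffer:
    """Simplified TCP receive buffer (hypothetical class)."""

    def __init__(self, isn: int = 1) -> None:
        self.next_expected = isn           # next in-order byte = ACK number to send
        self.held: dict[int, bytes] = {}   # out-of-order segments, keyed by sequence number
        self.delivered = bytearray()       # in-order data passed to the application

    def receive(self, seq: int, data: bytes) -> int:
        if seq >= self.next_expected:      # ignore duplicates of data already delivered
            self.held[seq] = data
        # Pass along every held segment that now continues the in-order stream.
        while self.next_expected in self.held:
            chunk = self.held.pop(self.next_expected)
            self.delivered += chunk
            self.next_expected += len(chunk)
        return self.next_expected          # acknowledgment number


if __name__ == "__main__":
    buf = ReceiveBuffer(isn=1)
    print(buf.receive(4, b"DEF"))   # 1: bytes 1-3 are still missing, so DEF is held
    print(buf.receive(1, b"ABC"))   # 7: ABC fills the gap and DEF is released too
    print(bytes(buf.delivered))     # b'ABCDEF'
```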

TCP Segments Are Reordered at the Destination

9.2.2.2 Configuring Basic Settings

Video Demonstration – Sequence Numbers and Acknowledgments

One of the functions of TCP is ensuring that each segment reaches its destination. The TCP services on the destination host acknowledge the data that they have received from the source application.

9.2.2.3 Configuring Logical VLAN Interfaces

9.2.2.4 Assigning Layer 2 Ports to VLANs

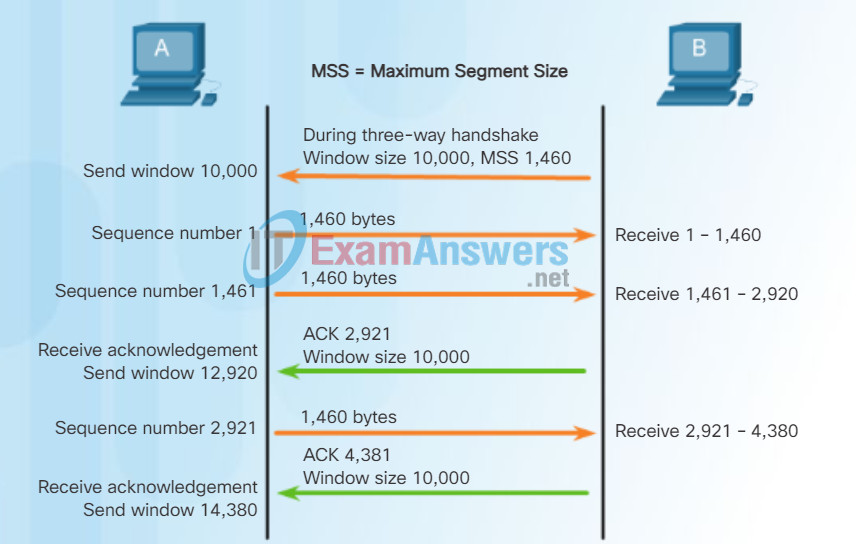

TCP also provides mechanisms for flow control, which limits the amount of data sent to what the destination can receive and process reliably. Flow control helps maintain the reliability of TCP transmission by adjusting the rate of data flow between source and destination for a given session. To accomplish this, the TCP header includes a 16-bit field called the window size.

The figure shows an example of window size and acknowledgments. The window size is the number of bytes that the destination device of a TCP session can accept and process at one time. In this example, PC B’s initial window size for the TCP session is 10,000 bytes. Starting with the first byte, byte number 1, the last byte PC A can send without receiving an acknowledgment is byte 10,000. This is known as PC A’s send window. The window size is included in every TCP segment so the destination can modify the window size at any time depending on buffer availability.

Note: In the figure, the source is transmitting 1,460 bytes of data within each TCP segment. This is known as the MSS (Maximum Segment Size).

The initial window size is agreed upon when the TCP session is established during the three-way handshake. The source device must limit the number of bytes sent to the destination device based on the destination’s window size. Only after the source device receives an acknowledgment that the bytes have been received can it continue sending more data for the session. Typically, the destination will not wait for all the bytes in its window to be received before replying with an acknowledgment. As the bytes are received and processed, the destination will send acknowledgments to inform the source that it can continue to send additional bytes.

Typically, PC B will not wait until all 10,000 bytes have been received before sending an acknowledgment. This means PC A can adjust its send window as it receives acknowledgments from PC B. As shown in the figure, when PC A receives an acknowledgment with the acknowledgment number 2,921, PC A’s send window slides forward so that it again spans 10,000 bytes (PC B’s current window size), from byte 2,921 to byte 12,920. PC A can now continue to send up to another 10,000 bytes to PC B as long as it does not send past its new send window at 12,920.

The process of the destination sending acknowledgments as it processes bytes received and the continual adjustment of the source’s send window is known as sliding windows.
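The send-window arithmetic from the PC A and PC B example can be checked with a few lines of Python. Like the chapter, this sketch assumes an ISN of 1, and it ignores sequence-number wraparound. `send_window` is a hypothetical helper name.

```python
MSS = 1460        # bytes of data per segment, as in the figure
WINDOW = 10_000   # PC B's window size, as in the figure


def send_window(ack_number: int, window_size: int) -> tuple[int, int]:
    """Return the first and last byte numbers the sender may transmit
    before it must wait for another acknowledgment."""
    return ack_number, ack_number + window_size - 1


if __name__ == "__main__":
    print(send_window(1, WINDOW))            # (1, 10000): nothing acknowledged yet
    print(send_window(1 + 2 * MSS, WINDOW))  # (2921, 12920): two segments acknowledged
```

Two full segments of 1,460 bytes cover bytes 1 through 2,920, so PC B acknowledges with 2,921, the next byte it expects. The window's last byte then becomes 2,921 + 10,000 - 1 = 12,920.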

If the availability of the destination’s buffer space decreases, it may reduce its window size to inform the source to reduce the number of bytes it should send without receiving an acknowledgment.

Note: Devices typically use the sliding windows protocol. With sliding windows, the receiver does not wait for the number of bytes in its window size to be reached before sending an acknowledgment. The receiver typically sends an acknowledgement after every two segments it receives. The number of segments received before being acknowledged may vary. The advantage of sliding windows is that it allows the sender to continuously transmit segments, as long as the receiver is acknowledging previous segments. The details of sliding windows are beyond the scope of this course.

TCP Window Size Example

The window size determines the number of bytes that can be sent before expecting an acknowledgment.

The acknowledgement number is the number of the next expected byte.

9.2.2.5 Configuring a Default Static Route

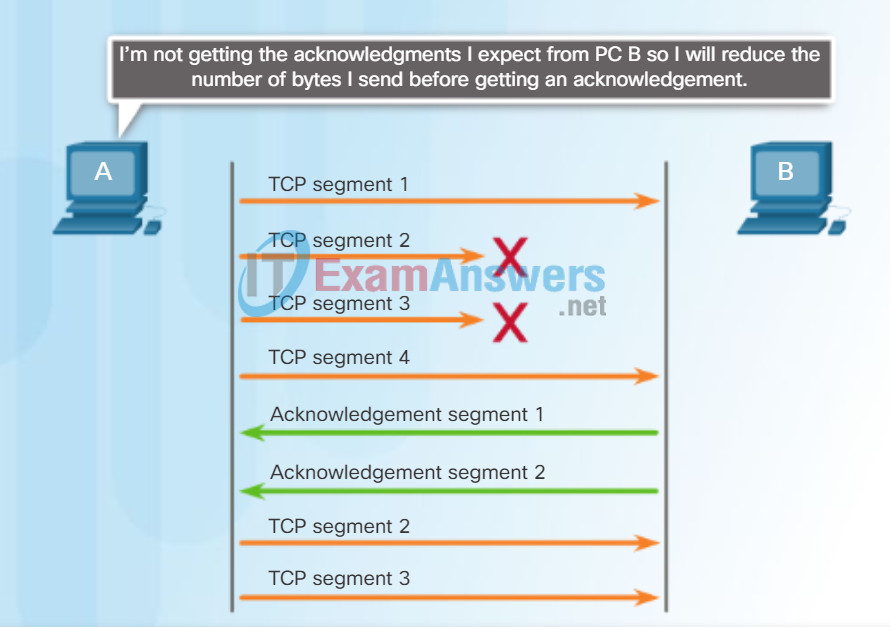

TCP Flow Control – Congestion Avoidance

When congestion occurs on a network, it results in packets being discarded by the overloaded router. When packets containing TCP segments do not reach their destination, they are left unacknowledged. By determining the rate at which TCP segments are sent but not acknowledged, the source can infer a certain level of network congestion.

Whenever there is congestion, retransmission of lost TCP segments from the source will occur. If the retransmission is not properly controlled, the additional retransmission of the TCP segments can make the congestion even worse. Not only are new packets with TCP segments introduced into the network, but the feedback effect of the retransmitted TCP segments that were lost will also add to the congestion. To avoid and control congestion, TCP employs several congestion handling mechanisms, timers, and algorithms.

If the source determines that the TCP segments are either not being acknowledged or not acknowledged in a timely manner, then it can reduce the number of bytes it sends before receiving an acknowledgment. Notice that it is the source that is reducing the number of unacknowledged bytes it sends and not the window size determined by the destination.
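The difference between the destination's window and the source's own limit can be sketched in Python. The mechanism shown is a heavily simplified additive-increase/multiplicative-decrease rule, chosen only for illustration. Real TCP congestion control, specified in RFC 5681, adds slow start, fast retransmit, and other refinements. `Sender` and its method names are hypothetical. "Congestion window" is the standard name for the limit that the source sets for itself.

```python
class Sender:
    """Hypothetical sender that limits unacknowledged bytes by two windows."""

    def __init__(self, mss: int, receiver_window: int) -> None:
        self.mss = mss
        self.receiver_window = receiver_window   # set by the destination
        self.congestion_window = mss             # set by the source itself

    def max_unacknowledged(self) -> int:
        # The source never exceeds either limit.
        return min(self.congestion_window, self.receiver_window)

    def on_window_acknowledged(self) -> None:
        # Additive increase: a full window was acknowledged in time, so grow by one MSS.
        self.congestion_window += self.mss

    def on_loss_detected(self) -> None:
        # Multiplicative decrease: segments went unacknowledged, so the source halves
        # its own limit. The destination's receiver_window is left unchanged.
        self.congestion_window = max(self.mss, self.congestion_window // 2)


if __name__ == "__main__":
    s = Sender(mss=1460, receiver_window=10_000)
    for _ in range(3):
        s.on_window_acknowledged()
    print(s.max_unacknowledged())   # 5840
    s.on_loss_detected()
    print(s.max_unacknowledged())   # 2920
```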

Note: Explanation of the actual congestion handling mechanisms, timers, and algorithms is beyond the scope of this course.

TCP Congestion Control

Acknowledgement numbers are for the next expected byte and not for a segment. The segment numbers used are simplified for illustration purposes.

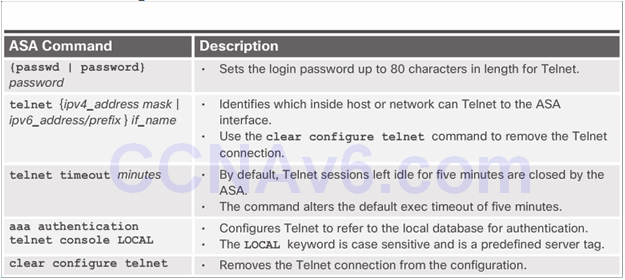

9.2.2.6 Configuring Remote Access Services

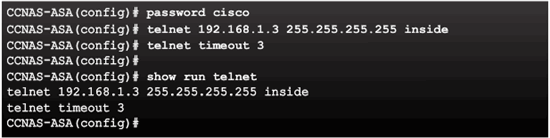

Telnet Configuration Commands

Telnet Configuration Commands Example

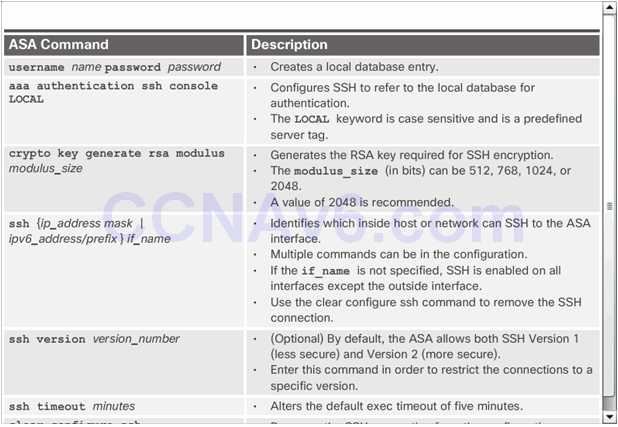

SSH Configuration Commands

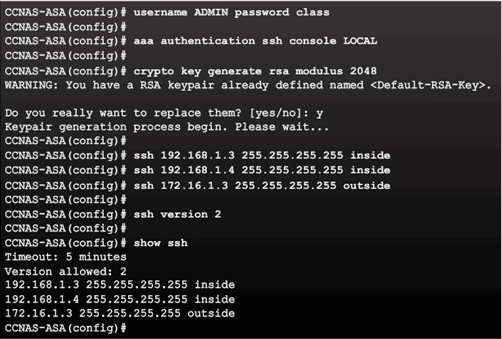

Configuring SSH Access Example

9.2.2.7 Configuring Network Time Protocol Services

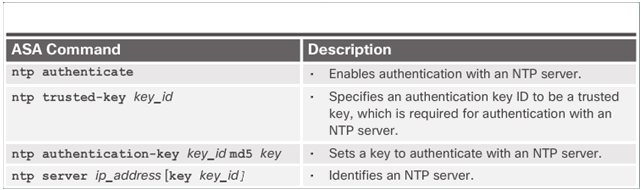

NTP Authentication Commands

Configuring NTP Example

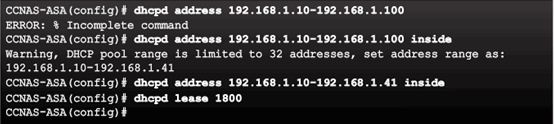

9.2.2.8 Configuring DHCP Services

9.2.3 Object Groups

9.2.3.1 Introduction to Objects and Object Groups

UDP is a simple protocol that provides the basic transport layer functions. It has much lower overhead than TCP because it is not connection-oriented and does not offer the sophisticated retransmission, sequencing, and flow control mechanisms that provide reliability.

This does not mean that applications that use UDP are always unreliable, nor does it mean that UDP is an inferior protocol. It simply means that these functions are not provided by the transport layer protocol and must be implemented elsewhere if required.

The low overhead of UDP makes it very desirable for protocols that make simple request and reply transactions. For example, using TCP for DHCP would introduce unnecessary network traffic. If there is a problem with a request or a reply, the device simply sends the request again if no response is received.
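Retrying at the application layer can be sketched in a few lines. This is a generic illustration built on stated assumptions, not DHCP's actual retransmission rules. `request_with_retry` is a hypothetical helper. `send_request` stands for any function that sends one request and returns the reply, or `None` when no reply arrives before a timeout.

```python
from typing import Callable, Optional


def request_with_retry(send_request: Callable[[], Optional[bytes]],
                       max_tries: int = 4) -> bytes:
    """Retry a simple request/reply exchange at the application layer."""
    for _ in range(max_tries):
        reply = send_request()
        if reply is not None:
            return reply
        # No reply: UDP will not retransmit, so the application simply asks again.
    raise TimeoutError(f"no reply after {max_tries} requests")


if __name__ == "__main__":
    attempts = []

    def flaky_exchange() -> Optional[bytes]:
        # Hypothetical transport: the first two requests get no reply.
        attempts.append(1)
        return None if len(attempts) < 3 else b"reply"

    print(request_with_retry(flaky_exchange), len(attempts))   # b'reply' 3
```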

UDP Low Overhead Data Transport

UDP provides low overhead data transport because it has a small datagram header and no network management traffic.

9.2.3.2 Configuring Network Objects

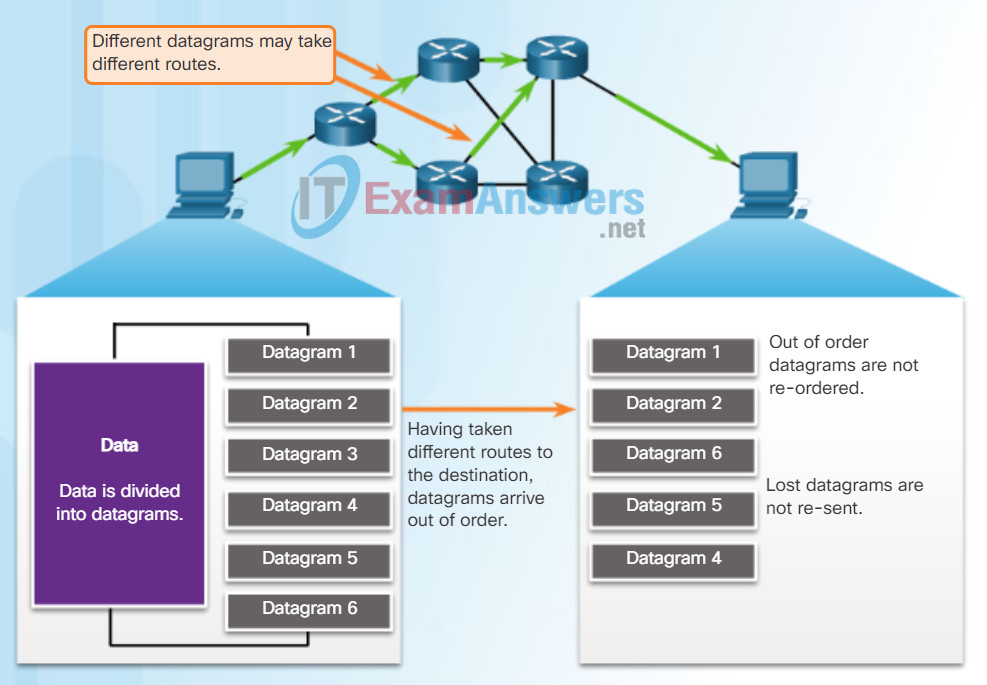

Like TCP segments, UDP datagrams sent to a destination may take different paths and arrive in the wrong order. UDP does not track sequence numbers the way TCP does, so it has no way to reorder the datagrams into their transmission order, as shown in the figure.

Therefore, UDP simply reassembles the data in the order that it was received and forwards it to the application. If the data sequence is important to the application, the application must identify the proper sequence and determine how the data should be processed.
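One way an application can do this is to number its own messages. The sketch assumes a hypothetical 4-byte sequence number placed at the start of each UDP payload. Real protocols use the same idea with their own formats; for example, RTP, used to carry VoIP media, includes a sequence number in its header. Sockets are left out so the example runs without a network, and the function names are hypothetical.

```python
import struct

SEQ_HEADER = struct.Struct("!I")   # hypothetical 4-byte application-level sequence number


def add_sequence_numbers(chunks: list[bytes]) -> list[bytes]:
    """Prefix each chunk with its position so the receiver can restore the order."""
    return [SEQ_HEADER.pack(i) + chunk for i, chunk in enumerate(chunks)]


def restore_order(datagrams: list[bytes]) -> list[bytes]:
    """Sort received datagrams by the application's own sequence number."""
    numbered = [(SEQ_HEADER.unpack_from(d)[0], d[SEQ_HEADER.size:]) for d in datagrams]
    return [payload for _, payload in sorted(numbered, key=lambda item: item[0])]


if __name__ == "__main__":
    sent = add_sequence_numbers([b"first", b"second", b"third"])
    arrived = [sent[2], sent[0], sent[1]]    # UDP delivered them out of order
    print(restore_order(arrived))            # [b'first', b'second', b'third']
```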

UDP: Connectionless and Unreliable

9.2.3.3 Configuring Service Objects

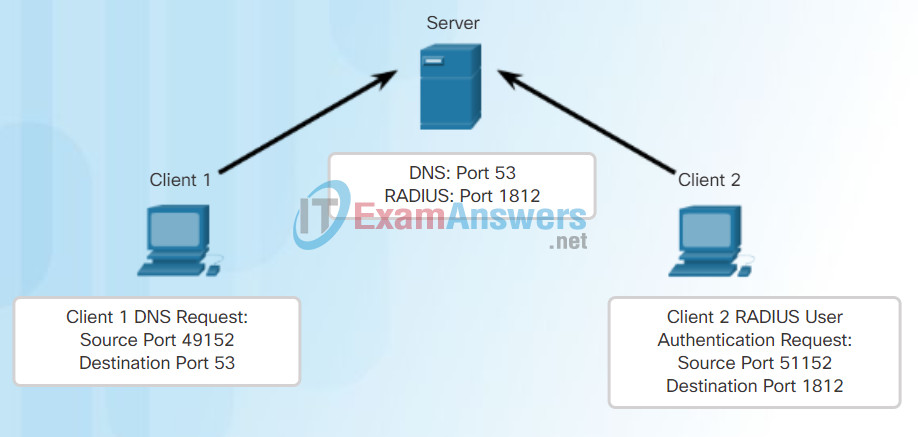

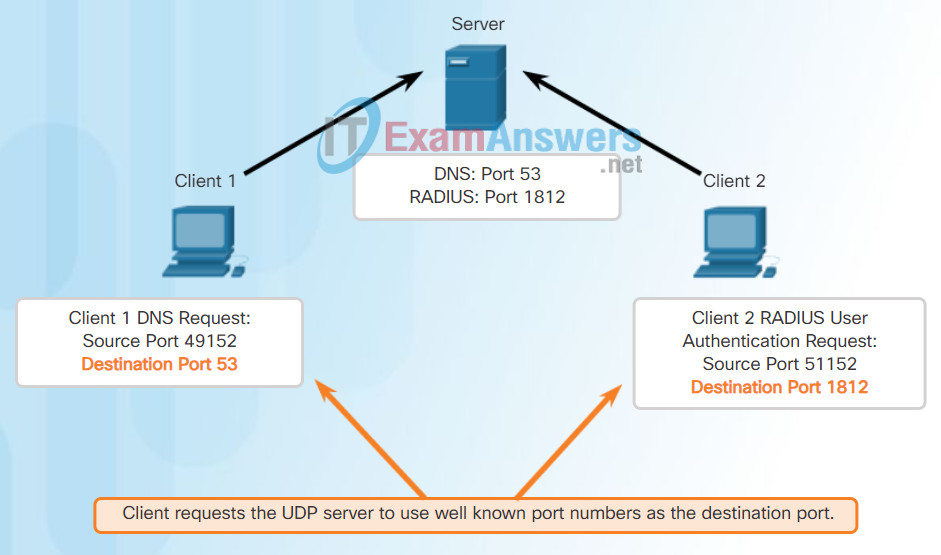

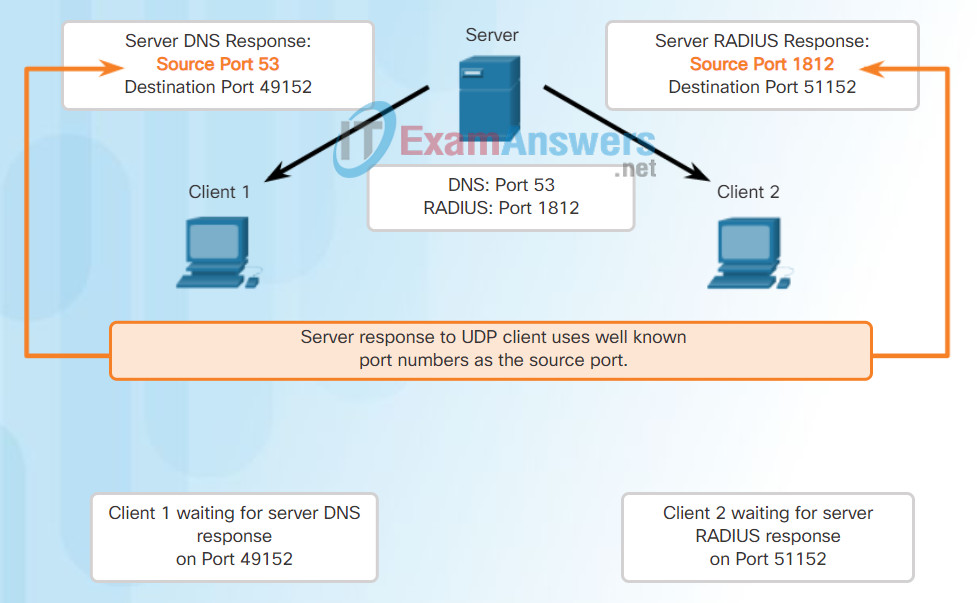

Like TCP-based applications, UDP-based server applications are assigned well-known or registered port numbers, as shown in the figure. When these applications or processes are running on a server, they accept the data matched with the assigned port number. When UDP receives a datagram destined for one of these ports, it forwards the application data to the appropriate application based on its port number.

Note: The Remote Authentication Dial-In User Service (RADIUS) server shown in the figure provides authentication, authorization, and accounting services to manage user access. The operation of RADIUS is beyond the scope of this course.

UDP Server Listening for Requests

Client requests to servers use well-known port numbers as the destination port.

9.2.3.4 Object Groups

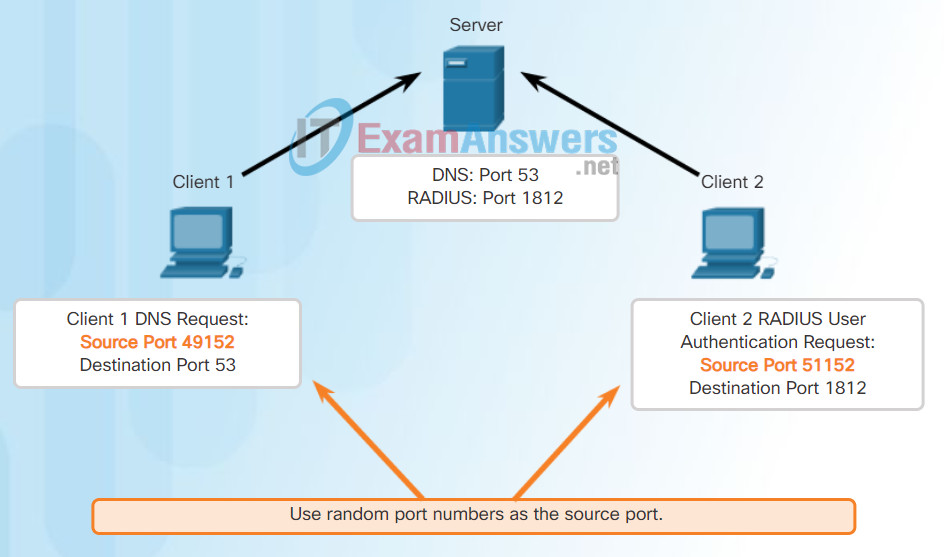

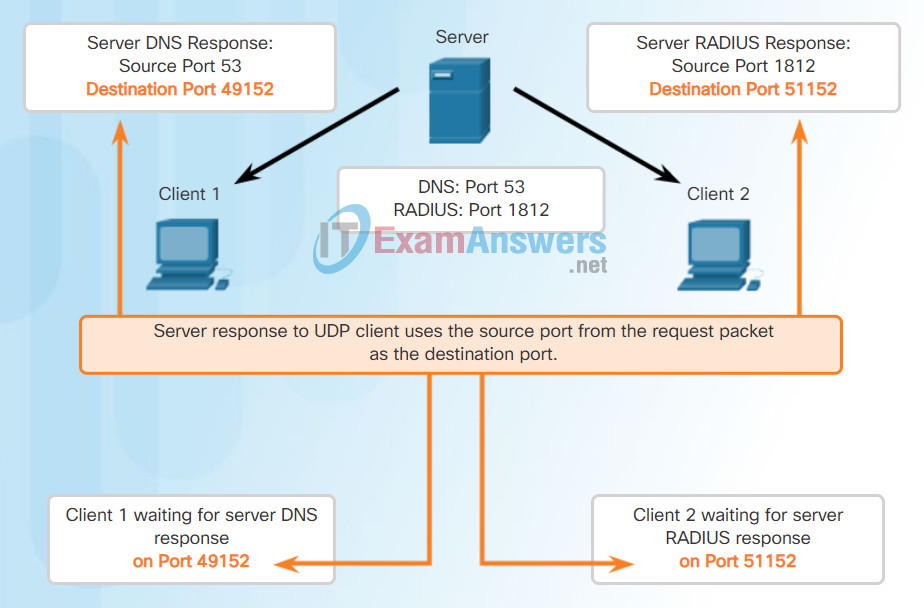

As with TCP, client-server communication is initiated by a client application that requests data from a server process. The UDP client process dynamically selects a port number from the range of dynamic (ephemeral) port numbers and uses this as the source port for the conversation. The destination port is usually the well-known or registered port number assigned to the server process.

After a client has selected the source and destination ports, the same pair of ports is used in the header of all datagrams used in the transaction. For the data returning to the client from the server, the source and destination port numbers in the datagram header are reversed.
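The port behavior can be observed with Python's standard `socket` module on the loopback interface. In this sketch, the server lets the OS choose its port instead of using a well-known port such as 53 (DNS). Binding to ports below 1024 usually requires administrator privileges on Unix-like systems. `udp_round_trip` is a hypothetical helper name.

```python
import socket


def udp_round_trip(message: bytes) -> dict:
    """Send one request from a UDP client to a UDP server on the loopback
    interface and report the ports seen in the request and the response."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        server.bind(("127.0.0.1", 0))       # stand-in for a well-known server port
        server.settimeout(2.0)
        client.settimeout(2.0)
        server_port = server.getsockname()[1]

        # The client never calls bind(); the OS picks a dynamic source port on first send.
        client.sendto(message, ("127.0.0.1", server_port))
        client_port = client.getsockname()[1]

        request, (_, request_src_port) = server.recvfrom(2048)
        # Reply to the source port the request came from: the ports are reversed.
        server.sendto(request.upper(), ("127.0.0.1", request_src_port))
        reply, (_, reply_src_port) = client.recvfrom(2048)

        return {
            "server_port": server_port,
            "client_port": client_port,
            "request_src_port": request_src_port,
            "reply_src_port": reply_src_port,
            "reply": reply,
        }
    finally:
        client.close()
        server.close()


if __name__ == "__main__":
    print(udp_round_trip(b"query"))
```

In the returned values, the request's source port equals the client's dynamically chosen port. The reply's source port equals the server's port, which is the reversal described above.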

Figures 1 through 5 show details of the UDP client processes.

Clients Sending UDP Requests

Request Destination Ports

Request Source Ports

Response Destination Ports

Response Source Ports

9.2.3.5 Configuring Common Object Groups

Lab – Using Wireshark to Examine a UDP DNS Capture

In this lab, you will complete the following objectives:

- Part 1: Record a PC’s IP Configuration Information

- Part 2: Use Wireshark to Capture DNS Queries and Responses

- Part 3: Analyze Captured DNS or UDP Packets

9.2.3.6 Activity – Identify Types of Object Groups

9.2.4 ACLs

9.2.4.1 ASA ACLs

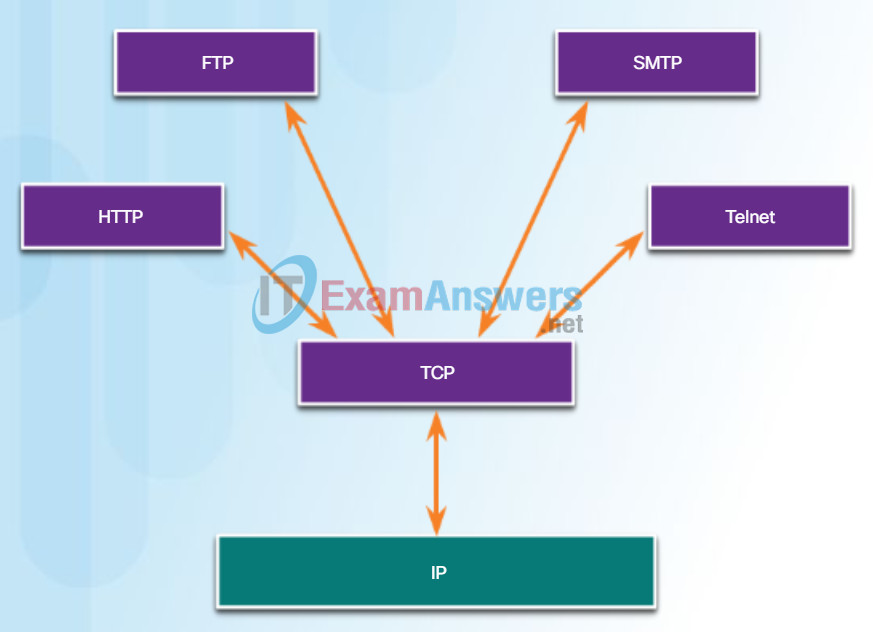

TCP is a great example of how the different layers of the TCP/IP protocol suite have specific roles. TCP handles all tasks associated with dividing the data stream into segments, providing reliability, controlling data flow, and the reordering of segments. TCP frees the application from having to manage any of these tasks. Applications, like those shown in the figure, can simply send the data stream to the transport layer and use the services of TCP.

Applications that use TCP

9.2.4.2 Types of ASA ACL Filtering

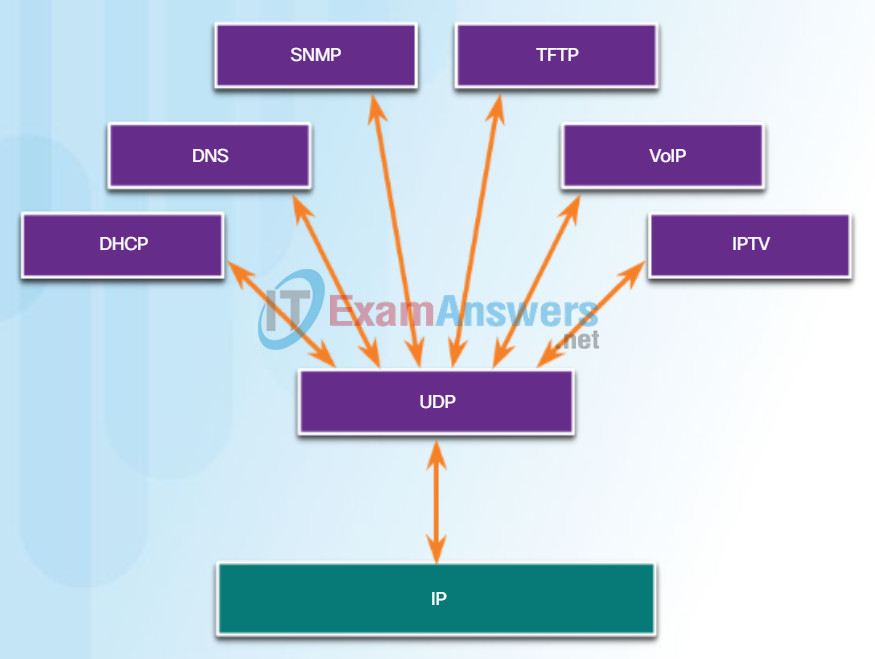

There are three types of applications that are best suited for UDP:

- Live video and multimedia applications – Can tolerate some data loss, but require little or no delay. Examples include VoIP and live streaming video.

- Simple request and reply applications – Applications with simple transactions where a host sends a request and may or may not receive a reply. Examples include DNS and DHCP.

- Applications that handle reliability themselves – Unidirectional communications where flow control, error detection, acknowledgments, and error recovery are not required or can be handled by the application. Examples include SNMP and TFTP.

Although DNS and SNMP use UDP by default, both can also use TCP. DNS will use TCP if the DNS request or DNS response is more than 512 bytes, such as when a DNS response includes a large number of name resolutions. Similarly, under some situations the network administrator may want to configure SNMP to use TCP.

Applications that use UDP

9.2.4.3 Types of ASA ACLs

Lab – Using Wireshark to Examine FTP and TFTP Captures

In this lab, you will complete the following objectives:

- Part 1: Identify TCP Header Fields and Operation Using a Wireshark FTP Session Capture

- Part 2: Identify UDP Header Fields and Operation Using a Wireshark TFTP Session Capture

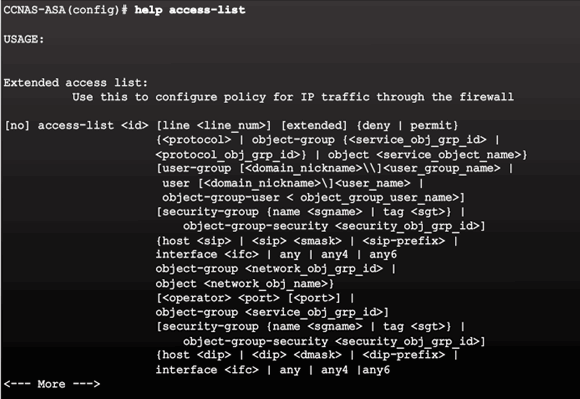

9.2.4.4 Configuring ACLs

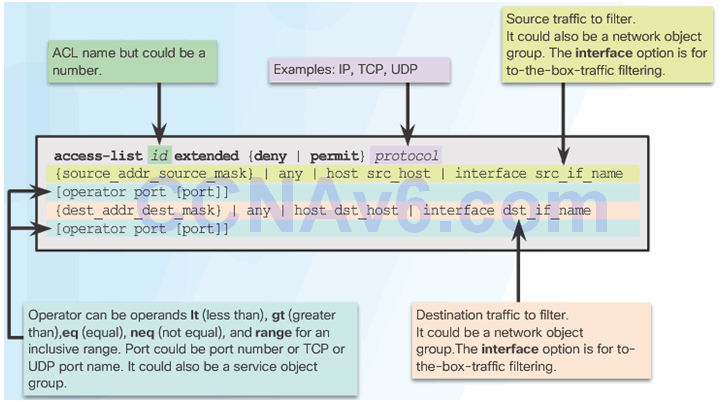

ACL Command Parameters

Condensed Extended ACL Syntax

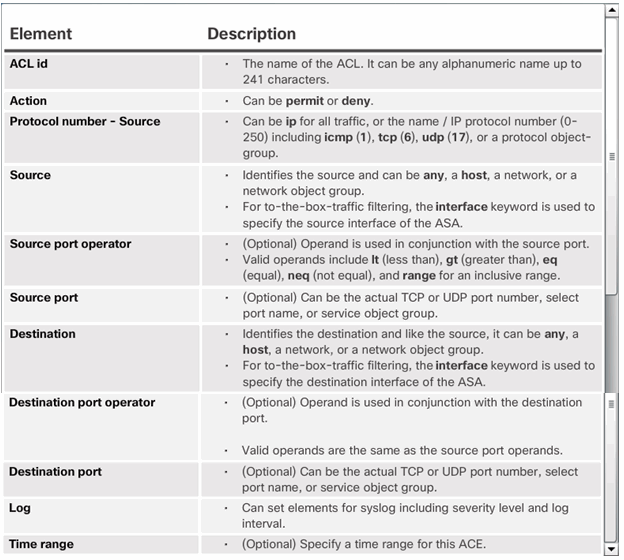

ASA ACL Elements

9.2.4.5 Applying ACLs

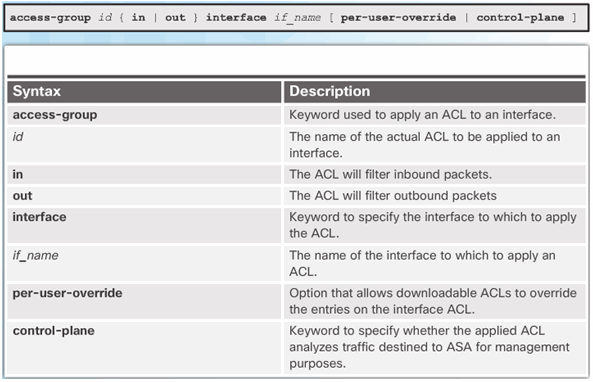

access-group Command Syntax

9.2.4.6 ACLs and Object Groups

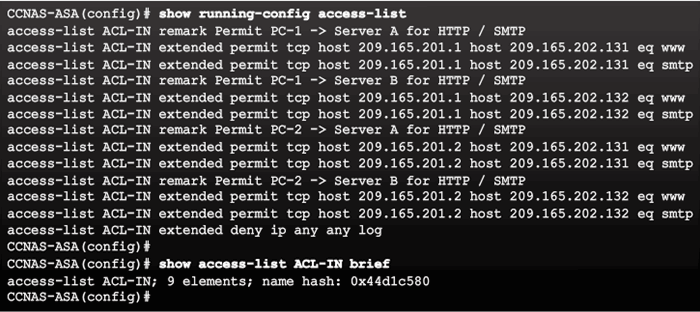

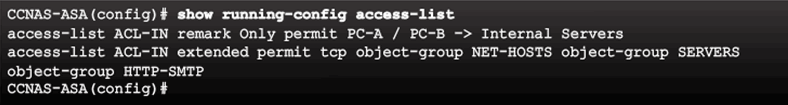

ACL Reference Topology

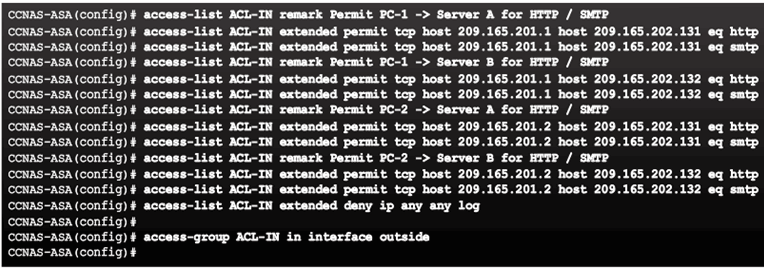

Extended ACL Configuration Example

Verifying the ACL

9.2.4.7 ACL Using Object Groups Examples

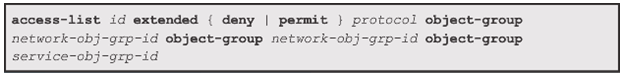

Condensed Extended ACL Syntax with Object Groups

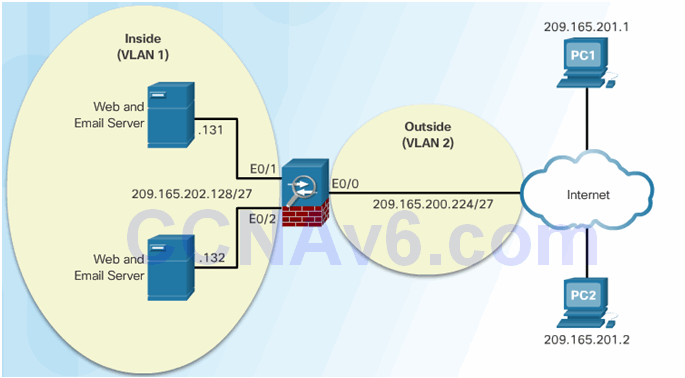

ACL Reference Topology

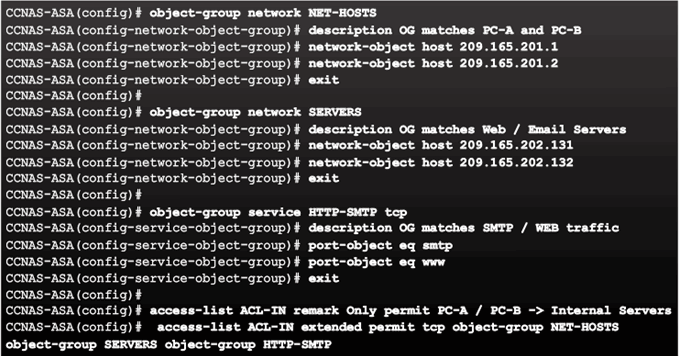

ACL and Object Group Configuration Example

Verifying the ACL and Object Group Configuration Example

9.2.5 NAT Services on an ASA

9.2.5.1 ASA NAT Overview

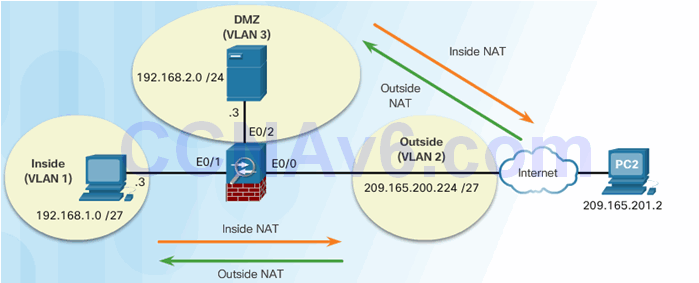

Types of NAT Deployments:

- Inside NAT

- Outside NAT

- Bidirectional NAT

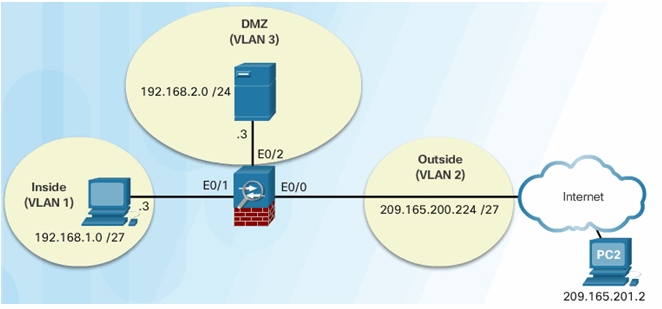

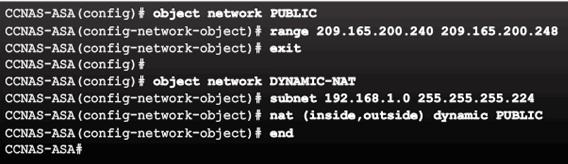

9.2.5.2 Configuring Dynamic NAT

Dynamic NAT Reference Topology

Dynamic NAT Configuration Example

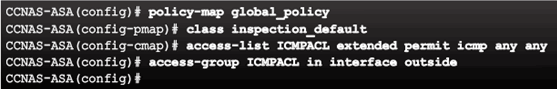

Enable Return Traffic Example

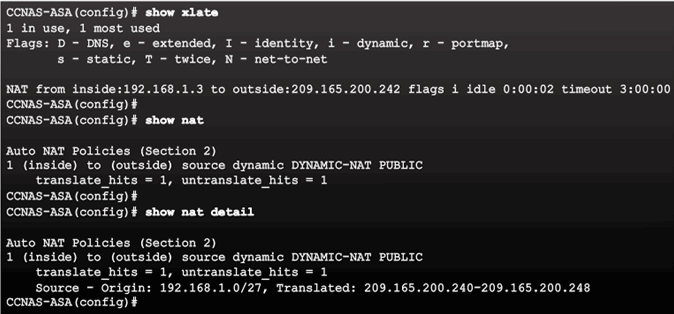

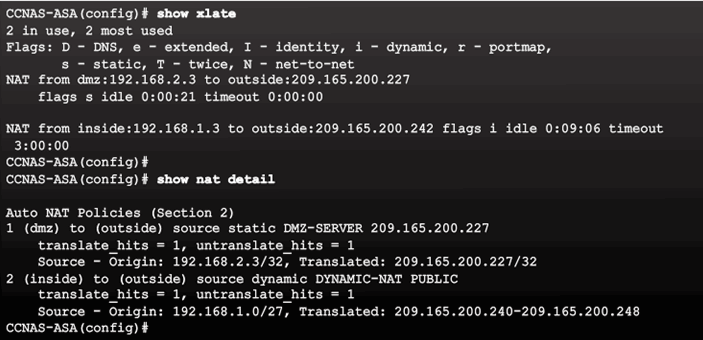

Verifying the Dynamic NAT Configuration Example

9.2.5.3 Configuring Dynamic PAT

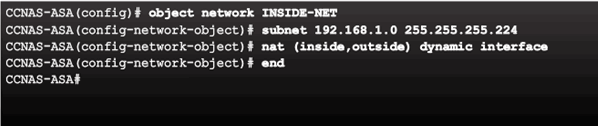

Dynamic PAT Configuration Example

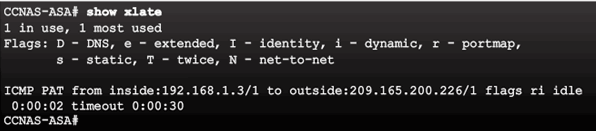

Verifying the Dynamic PAT Configuration Example

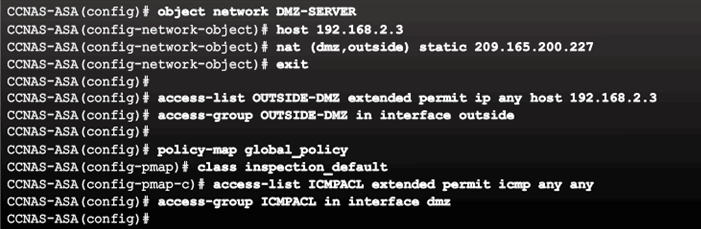

9.2.5.4 Configuring Static NAT

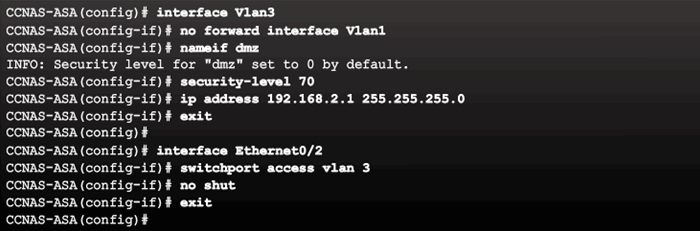

Configure the DMZ Interface Example

Static NAT Configuration Example

Verifying the Static NAT Configuration Example

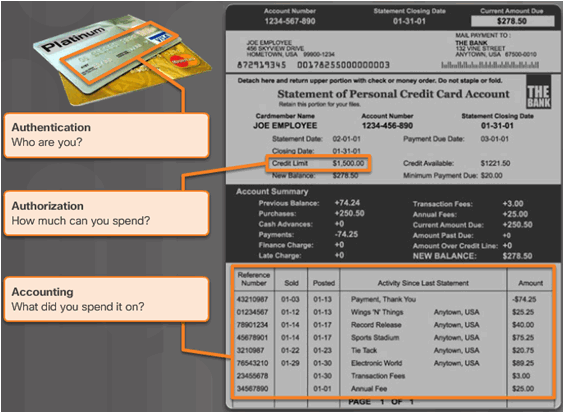

9.2.6 AAA

9.2.6.1 AAA Review

9.2.6.2 Local Database and Servers

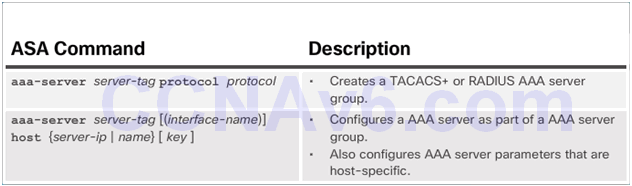

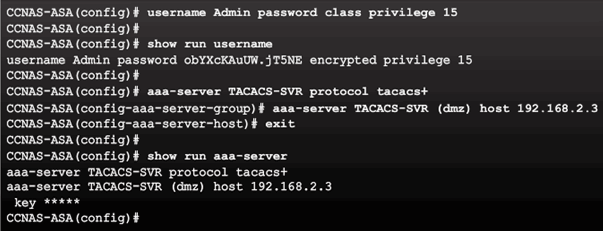

RADIUS and TACACS+ Server Commands

Sample AAA TACACS+ Server Configuration

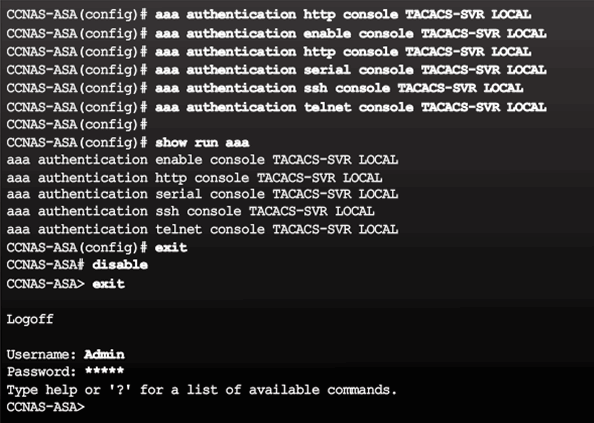

9.2.6.3 AAA Configuration

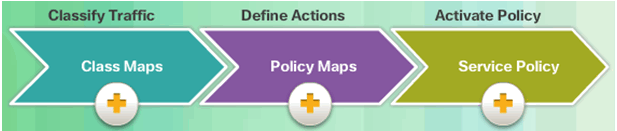

9.2.7 Service Policies on an ASA

9.2.7.1 Overview of MPF

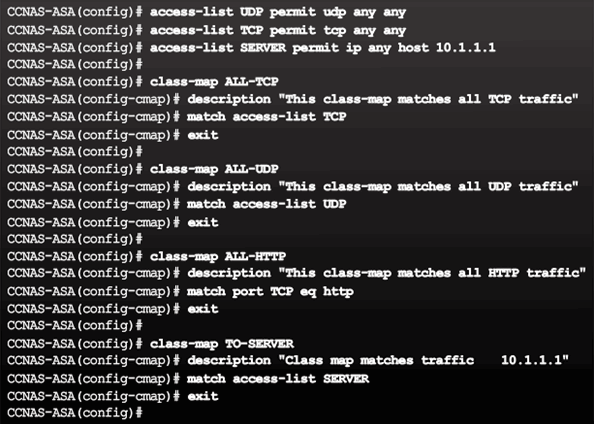

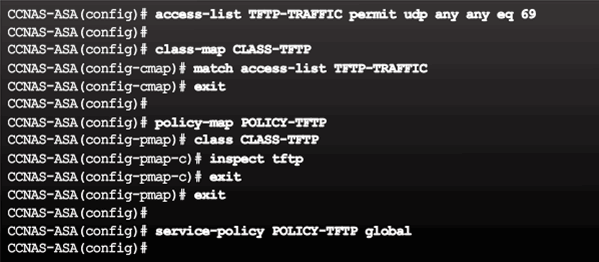

9.2.7.2 Configuring Class Maps

9.2.7.3 Define and Activate a Policy

Implementing Modular Policy Framework

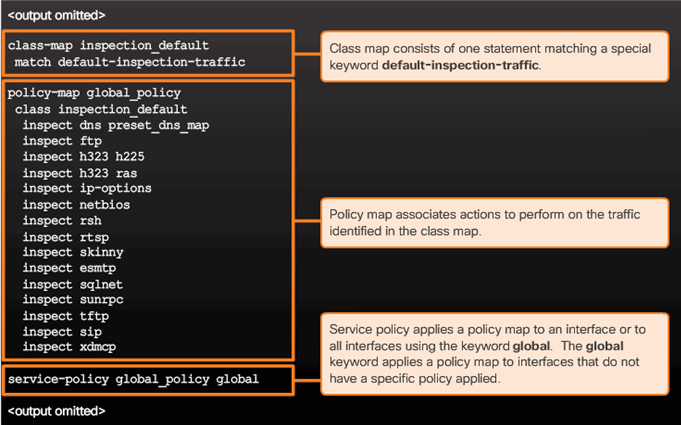

9.2.7.4 ASA Default Policy

Default Service Policy Configuration

9.3 Summary

9.3.1 Conclusion

9.3.1.1 Packet Tracer – Configure ASA Basic Settings and Firewall Using the CLI

We Need to Talk, Again

Note: It is important that the students have completed the Introductory Modeling Activity for this chapter. This activity works best in medium-sized groups of 6 to 8 students.

The instructor will whisper a complex message to the first student in a group. An example of the message might be “We are expecting a blizzard tomorrow. It should be arriving in the morning, and school will be delayed two hours, so bring your homework.”

That student whispers the message to the next student in the group. The last student of each group whispers the message to a student in the following group. Each group follows this process until all members of each group have heard the whispered message.

Here are the rules you are to follow:

- You can whisper the message in short parts to your neighbor AND you can repeat the message parts after verifying your neighbor heard the correct message.

- Small parts of the message may be checked and repeated (clockwise OR counter-clockwise to ensure accuracy of the message parts) by whispering. A student will be assigned to time the entire activity.

- When the message has reached the end of the group, the last student will say aloud what she or he heard. Small parts of the message may be repeated (i.e., re-sent), and the process can be restarted to ensure that ALL parts of the message are fully delivered and correct.

- The instructor will restate the original message so that the groups can check how accurately it was delivered.

TCP and UDP are the transport layer protocols that this activity models. As you compare the activity with them, consider that:

- The type of data will affect whether TCP or UDP will be used as the method of delivery.

- Timing is a factor and will affect how long it takes to send/receive TCP/UDP data transmissions.

9.3.1.2 Lab – Configure ASA Basic Settings and Firewall Using the CLI

This simulation activity is intended to provide a foundation for understanding TCP and UDP in detail. Simulation mode lets you view how each of the different protocols functions.

As data moves through the network, it is broken down into smaller pieces and identified in some fashion so that the pieces can be put back together. Each of these pieces is called a protocol data unit (PDU) and is associated with a specific layer. Packet Tracer Simulation mode enables the user to view each of the protocols and the associated PDU.

This activity provides an opportunity to explore the functionality of the TCP and UDP protocols, multiplexing and the function of port numbers in determining which local application requested the data or is sending the data.

9.3.1.3 Chapter 9: Implementing the Cisco Adaptive Security Appliance

The transport layer provides transport-related services by:

- Dividing data received from an application into segments

- Adding a header to identify and manage each segment

- Using the header information to reassemble the segments back into application data

- Passing the assembled data to the correct application
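The segmentation and reassembly steps in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions. The 6-byte header (a sequence number plus a payload length) is a hypothetical format chosen for clarity and is not the real TCP or UDP header layout. The functions `segment` and `reassemble` are likewise illustrative names.

```python
import struct

# Hypothetical 6-byte header, for illustration only (this is NOT the real
# TCP or UDP header layout): a 4-byte sequence number followed by a 2-byte
# payload length, both in network (big-endian) byte order.
HEADER = struct.Struct("!IH")


def segment(data: bytes, max_payload: int) -> list:
    """Divide application data into segments, each prefixed with a header."""
    segments = []
    for seq, offset in enumerate(range(0, len(data), max_payload)):
        chunk = data[offset:offset + max_payload]
        segments.append(HEADER.pack(seq, len(chunk)) + chunk)
    return segments


def reassemble(segments: list) -> bytes:
    """Use the header of each segment to put the data back in order."""
    parts = {}
    for seg in segments:
        seq, length = HEADER.unpack_from(seg)
        parts[seq] = seg[HEADER.size:HEADER.size + length]
    return b"".join(parts[seq] for seq in sorted(parts))


message = b"We are expecting a blizzard tomorrow."
segments = segment(message, max_payload=8)
segments.reverse()  # simulate segments arriving out of order
assert reassemble(segments) == message
```

The sequence number in each header is what allows the receiver to rebuild the data even when segments arrive out of order.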

UDP and TCP are common transport layer protocols.

UDP datagrams and TCP segments have headers added in front of the data that include a source port number and destination port number. These port numbers enable data to be directed to the correct application running on the destination computer.
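As a sketch of how port numbers direct data to an application, the Python below builds an 8-byte UDP header and then delivers the payload based on the destination port. The header has four 16-bit fields in network byte order: source port, destination port, length, and checksum. A TCP header begins with the same two port fields. The checksum is left at 0, which for UDP over IPv4 means "no checksum computed."

A few parts are assumptions for illustration only:

- The `apps` table and its handlers are hypothetical.
- Ports 53 and 69 are the well-known DNS and TFTP ports.
- In a real host, this demultiplexing is done by the operating system, not by application code.

```python
import struct

# UDP header: source port, destination port, length, checksum.
# Each field is an unsigned 16-bit integer in network (big-endian) byte order.
UDP_HEADER = struct.Struct("!HHHH")


def build_udp_datagram(src_port: int, dst_port: int, payload: bytes) -> bytes:
    length = UDP_HEADER.size + len(payload)  # the length field covers header + data
    checksum = 0  # 0 = "no checksum computed", which UDP over IPv4 allows
    return UDP_HEADER.pack(src_port, dst_port, length, checksum) + payload


def deliver(datagram: bytes, applications: dict) -> str:
    """Pass the payload to the application bound to the destination port."""
    _src, dst_port, length, _checksum = UDP_HEADER.unpack_from(datagram)
    payload = datagram[UDP_HEADER.size:length]
    handler = applications.get(dst_port)
    if handler is None:
        return f"no application listening on port {dst_port}"
    return handler(payload)


# Hypothetical applications keyed by the port each one listens on.
apps = {
    53: lambda data: f"DNS server got {len(data)} bytes",
    69: lambda data: f"TFTP server got {len(data)} bytes",
}

datagram = build_udp_datagram(49152, 53, b"query")
print(deliver(datagram, apps))  # DNS server got 5 bytes
```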

TCP does not pass any data to the network until it knows that the destination is ready to receive it. TCP then manages the flow of the data and resends any data segments that are not acknowledged as being received at the destination. TCP uses handshaking, timers, acknowledgment messages, and dynamic windowing to achieve reliability. The reliability process, however, imposes overhead on the network. TCP headers are larger: at least 20 bytes, compared with 8 bytes for a UDP header. There is also more network traffic between the source and destination.
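The acknowledge-and-resend idea above can be illustrated with a deliberately simplified simulation. This is a stop-and-wait sender: it sends one segment, waits for its acknowledgment, and retransmits the segment when the simulated timer expires.

Real TCP is more elaborate. It keeps several segments in flight using a sliding window, it acknowledges data cumulatively, and it adapts its timers. So treat this as a sketch of the principle only. The lossy channel, the loss pattern, and all function names are hypothetical.

```python
def send_reliably(segments, channel, max_tries=5):
    """Stop-and-wait sender: send one segment, wait for its ACK, and
    retransmit it if the (simulated) timer expires without an ACK.

    channel(seq, seg) stands in for the network and the receiver. It returns
    True if an acknowledgment for seq arrives before the timeout.
    Returns the number of transmissions each segment needed.
    """
    attempts = []
    for seq, seg in enumerate(segments):
        for attempt in range(1, max_tries + 1):
            if channel(seq, seg):  # ACK received: move on to the next segment
                attempts.append(attempt)
                break
        else:  # every try timed out
            raise TimeoutError(f"segment {seq} unacknowledged after {max_tries} tries")
    return attempts


def make_lossy_channel(drops):
    """Hypothetical channel that loses each (seq, transmission_number) in drops."""
    sent = {}
    delivered = []

    def channel(seq, seg):
        sent[seq] = sent.get(seq, 0) + 1
        if (seq, sent[seq]) in drops:
            return False  # segment lost, so no ACK comes back
        delivered.append(seg)
        return True

    return channel, delivered


# Lose the first two transmissions of segment 1.
channel, delivered = make_lossy_channel({(1, 1), (1, 2)})
attempts = send_reliably([b"seg0", b"seg1", b"seg2"], channel)
print(attempts)   # [1, 3, 1]
print(delivered)  # [b'seg0', b'seg1', b'seg2']
```

Segment 1 needs three transmissions before it is acknowledged, which is exactly the extra traffic that reliability costs. The sketch models only lost data segments. Lost acknowledgments, which would make the receiver see duplicates, are not modeled.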

If the application data needs to be delivered across the network quickly, or if network bandwidth cannot support the overhead of control messages being exchanged between the source and the destination systems, UDP would be the developer’s preferred transport layer protocol. UDP provides none of the TCP reliability features. However, this does not necessarily mean that the communication itself is unreliable; there may be mechanisms in the application layer protocols and services that process lost or delayed datagrams if the application has these requirements.
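As one example of reliability handled above UDP, an application can number its own datagrams and check the numbers on arrival. The Python below is a minimal sketch, assuming a hypothetical scheme where every datagram payload starts with an application-level sequence number counting from 0. Real-time protocols such as RTP carry a sequence number for a similar purpose.

The sketch reports which datagrams never arrived and which arrived late. What to do about them is up to the application: it might request a resend, conceal the gap, or simply ignore it.

```python
def find_missing(received_seqs, expected_count):
    """Sequence numbers (0..expected_count-1) that never arrived."""
    seen = set(received_seqs)
    return [seq for seq in range(expected_count) if seq not in seen]


def find_late(received_seqs):
    """Sequence numbers that arrived after a higher-numbered datagram."""
    highest = -1
    late = []
    for seq in received_seqs:
        if seq < highest:
            late.append(seq)
        else:
            highest = seq
    return late


# Order in which a hypothetical receiver saw datagrams 0..5.
arrivals = [0, 1, 3, 2, 5]
print(find_missing(arrivals, 6))  # [4]
print(find_late(arrivals))        # [2]
```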

The application developer chooses the transport layer protocol that best meets the requirements of the application. It is important to remember that the other layers all play a part in data network communications and influence their performance.